There are several ways to approach a data integration project, but how can you tell which method is best suited to your organisation’s specific goals and environment?

In our previous article, we explored the concept of data integration, key process steps, and the business value it offers. This time, we’ll focus on the most commonly used integration techniques, and help you determine which one is the right fit for your business.

Shall we get started?

Data Integration Techniques

As on any journey, there are different routes that can lead you to the final destination. Data integration is no different: there are several ways to carry out the project. Let's take a look at them:

1. ETL (Extract, Transform, Load)

ETL is one of the most established integration methods. It involves extracting data from multiple systems, transforming it into a consistent format based on business logic, and then loading it into a destination such as a data warehouse. It’s particularly effective for handling large volumes of data in batch processes.

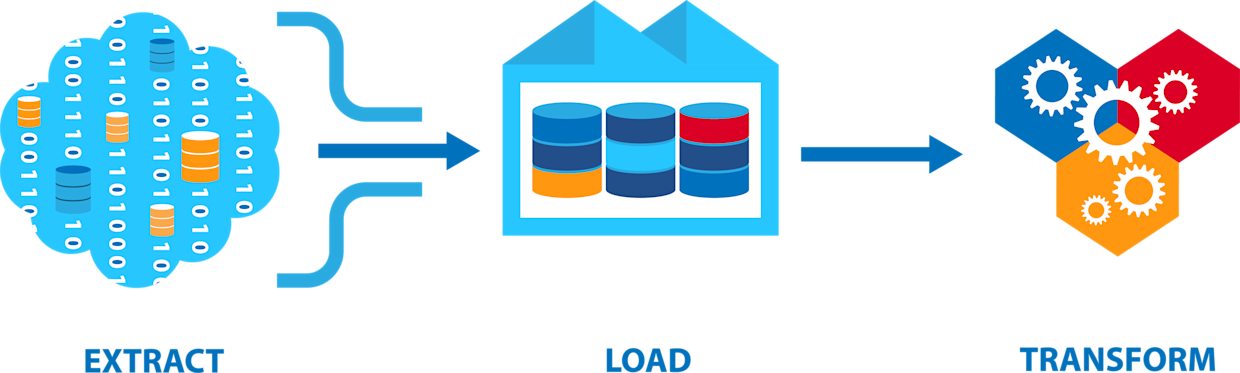
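To make the three stages concrete, here is a minimal ETL sketch using only the Python standard library. It assumes two hypothetical sources (a CRM CSV export and a list of billing records) and uses an in-memory SQLite database as a stand-in for the data warehouse. Table and column names are illustrative. The key point is that all cleaning and aggregation happen in Python *before* anything is written to the target.

```python
import csv
import io
import sqlite3

# Hypothetical source extracts: a CRM export (CSV) and billing records (dicts).
CRM_CSV = """customer_id,name,country
1, Alice ,es
2,Bob,GB
"""

BILLING_ROWS = [
    {"customer": "1", "amount_eur": "120.50"},
    {"customer": "2", "amount_eur": "80"},
    {"customer": "1", "amount_eur": "30.00"},
]


def extract():
    """Extract: read raw records from each source system."""
    crm = list(csv.DictReader(io.StringIO(CRM_CSV)))
    return crm, BILLING_ROWS


def transform(crm, billing):
    """Transform: clean and reshape records into one consistent format."""
    totals = {}
    for row in billing:
        cid = int(row["customer"])
        totals[cid] = totals.get(cid, 0.0) + float(row["amount_eur"])
    return [
        (
            int(c["customer_id"]),
            c["name"].strip(),                 # remove stray whitespace
            c["country"].strip().upper(),      # normalise country codes
            round(totals.get(int(c["customer_id"]), 0.0), 2),
        )
        for c in crm
    ]


def load(rows, conn):
    """Load: write the already-transformed rows into the warehouse table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customer_revenue "
        "(customer_id INTEGER PRIMARY KEY, name TEXT, country TEXT, revenue_eur REAL)"
    )
    conn.executemany("INSERT INTO customer_revenue VALUES (?, ?, ?, ?)", rows)
    conn.commit()


def run_etl(conn):
    crm, billing = extract()
    load(transform(crm, billing), conn)


if __name__ == "__main__":
    warehouse = sqlite3.connect(":memory:")  # stand-in for a real data warehouse
    run_etl(warehouse)
    print(warehouse.execute("SELECT * FROM customer_revenue ORDER BY customer_id").fetchall())
```

In a real batch pipeline, `run_etl` would typically be triggered on a schedule (for example nightly), which is what makes ETL well suited to large periodic volumes.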

2. ELT (Extract, Load, Transform)

A variation on ETL, ELT swaps the last two steps. Data is first loaded, raw, into the target warehouse, and transformations are performed there, typically using SQL or the platform's built-in tools. This suits modern cloud-based platforms with strong processing capabilities.
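The difference from ETL is easiest to see side by side. In this minimal ELT sketch, raw records (hypothetical sample data) are loaded untouched into a staging table, and the cleaning and aggregation are then expressed in SQL and run by the warehouse's own engine. SQLite stands in for a cloud warehouse here; table names are illustrative.

```python
import sqlite3

# Hypothetical raw records, loaded exactly as they arrive (no cleaning yet).
RAW_ORDERS = [
    ("1", " alice ", "120.50", "2024-01-03"),
    ("2", "BOB", "80", "2024-01-04"),
    ("3", " alice ", "30.00", "2024-02-10"),
]


def extract_and_load(conn):
    """Extract + Load: copy raw rows into a staging table, all as TEXT."""
    conn.execute(
        "CREATE TABLE raw_orders (order_id TEXT, customer TEXT, amount TEXT, order_date TEXT)"
    )
    conn.executemany("INSERT INTO raw_orders VALUES (?, ?, ?, ?)", RAW_ORDERS)


def transform_in_warehouse(conn):
    """Transform: the warehouse's SQL engine builds the clean model."""
    conn.executescript(
        """
        CREATE TABLE monthly_revenue AS
        SELECT
            LOWER(TRIM(customer))     AS customer,
            SUBSTR(order_date, 1, 7)  AS month,
            SUM(CAST(amount AS REAL)) AS revenue
        FROM raw_orders
        GROUP BY LOWER(TRIM(customer)), SUBSTR(order_date, 1, 7);
        """
    )


if __name__ == "__main__":
    warehouse = sqlite3.connect(":memory:")
    extract_and_load(warehouse)
    transform_in_warehouse(warehouse)
    print(warehouse.execute(
        "SELECT * FROM monthly_revenue ORDER BY customer, month").fetchall())
```

Because the raw staging table is kept, the SQL transformation can be rewritten and re-run later without extracting from the sources again, which is one of the practical attractions of ELT.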

For a closer look at the differences between ETL and ELT, see the AWS article "ETL vs ELT - Difference Between Data-Processing Approaches".

3. API-Based Integration

This approach relies on APIs. But what exactly are APIs? APIs (Application Programming Interfaces) are sets of rules that allow different software programs to interact with each other. Put simply, they act as the language that applications use to communicate: they let different systems connect and exchange data, often in real time. Because they expose systems that already exist, APIs let you leverage your current infrastructure, saving time and resources.

APIs aren't anything new; they've been around for years. However, with the evolution of technology, Data Science, and Artificial Intelligence, they've undergone a makeover. They were once used mainly as internal interfaces between applications within the same company; today they are also a means of interconnecting applications and data with third parties. This shift has given rise to APIfication: a business model built around interfaces that allow one application to interact with another.

Let's take an example to understand it better: Samsung's Smart Home allows us to control household appliances and devices from a mobile phone. From the TV to the washing machine, everything can be interconnected, and this is possible thanks to APIs operating through the cloud.

Returning to the main topic, API-based data integration is often used for cloud-based applications seeking maximum efficiency and agility. Because data is requested when it is needed rather than collected in scheduled batches, it can deliver fresher data than batch processing.
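As an illustration, the sketch below pulls customer records from a REST API and maps them onto an internal schema. The endpoint URL, the `{"data": [...]}` response shape and the field names (`id`, `full_name`, `email`) are all hypothetical assumptions; a real API would also need authentication and pagination. The HTTP call is injectable so the mapping logic can be exercised offline with a stub.

```python
import json
import urllib.request
from typing import Callable, Dict

# Hypothetical third-party CRM endpoint (assumption, not a real service).
CRM_API_URL = "https://crm.example.com/api/v1/customers"


def fetch_json(url: str) -> dict:
    """Call an HTTP API and decode its JSON body."""
    req = urllib.request.Request(url, headers={"Accept": "application/json"})
    with urllib.request.urlopen(req, timeout=10) as resp:
        return json.loads(resp.read().decode("utf-8"))


def sync_customers(fetch: Callable[[str], dict] = fetch_json) -> Dict[int, dict]:
    """Request customers on demand and map them onto our internal schema."""
    payload = fetch(CRM_API_URL)
    return {
        item["id"]: {"name": item["full_name"], "email": item["email"].lower()}
        for item in payload["data"]  # assumed response shape: {"data": [...]}
    }


if __name__ == "__main__":
    # Offline demo: a stub stands in for the real HTTP call.
    def fake_fetch(url: str) -> dict:
        return {"data": [{"id": 7, "full_name": "Alice Smith", "email": "Alice@Example.com"}]}

    print(sync_customers(fake_fetch))
```

Calling `sync_customers` whenever fresh data is needed (or in response to an event) is what distinguishes this style from a scheduled batch job.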

4. Federated Integration

This approach creates a single virtual view of data spread across different systems, querying each system where the data lives instead of physically moving or consolidating it. Federated integration provides real-time access to data across systems, but be careful: it can be complex to implement and maintain.
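A small way to get a feel for federation is SQLite's `ATTACH DATABASE`, used here as a stand-in for a real federated query engine. Two separate databases play the role of independent systems (a hypothetical CRM and billing system), and one query joins across both without copying rows into a central store. Production federation spans remote, heterogeneous systems over a network, which is where the complexity mentioned above comes from.

```python
import sqlite3


def build_sources():
    """Create two independent databases standing in for separate systems."""
    conn = sqlite3.connect(":memory:")                     # system A: CRM
    conn.execute("ATTACH DATABASE ':memory:' AS billing")   # system B: billing
    conn.execute("CREATE TABLE main.customers (id INTEGER PRIMARY KEY, name TEXT)")
    conn.execute("CREATE TABLE billing.invoices (customer_id INTEGER, amount REAL)")
    conn.executemany("INSERT INTO main.customers VALUES (?, ?)",
                     [(1, "Alice"), (2, "Bob")])
    conn.executemany("INSERT INTO billing.invoices VALUES (?, ?)",
                     [(1, 120.5), (1, 30.0), (2, 80.0)])
    return conn


def federated_revenue(conn):
    """One query spans both databases; each table stays in its own system."""
    return conn.execute(
        """
        SELECT c.name, SUM(i.amount) AS revenue
        FROM main.customers AS c
        JOIN billing.invoices AS i ON i.customer_id = c.id
        GROUP BY c.name
        ORDER BY c.name
        """
    ).fetchall()


if __name__ == "__main__":
    print(federated_revenue(build_sources()))
```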

5. Data Virtualisation

This method creates a virtual layer that integrates data from various systems into a unified view. The virtualisation layer sits on top of existing sources, including traditional data warehouses, and accesses and combines their data on demand. Users therefore work with a single logical layer of information, which makes the data easier to understand and to turn into valuable insights. Because nothing is physically consolidated, it can be used to integrate data quickly without building ETL processes.
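The idea of a virtual layer can be sketched in a few lines. The hypothetical `VirtualView` class below maps two differently shaped sources (a warehouse table and SaaS-style records) onto one logical schema. Rows are read and mapped only when the view is queried, so nothing is copied and new source data appears immediately. All class, table and field names are illustrative assumptions; real virtualisation platforms add query optimisation, caching and security on top of this basic pattern.

```python
import sqlite3
from typing import Callable, Dict, Iterator, List


class VirtualView:
    """Maps heterogeneous sources onto one logical schema, read on demand."""

    def __init__(self) -> None:
        self._sources: List[Callable[[], Iterator[Dict]]] = []

    def register(self, reader: Callable[[], Iterator[Dict]]) -> None:
        self._sources.append(reader)

    def rows(self, where: Callable[[Dict], bool] = lambda r: True) -> Iterator[Dict]:
        for reader in self._sources:
            for row in reader():  # fetched at query time, never persisted
                if where(row):
                    yield row


# Source 1: a warehouse table with its own column names.
warehouse = sqlite3.connect(":memory:")
warehouse.execute("CREATE TABLE cust (cust_id INTEGER, cust_nm TEXT, ctry TEXT)")
warehouse.executemany("INSERT INTO cust VALUES (?, ?, ?)", [(1, "Alice", "ES"), (2, "Bob", "GB")])


def read_warehouse() -> Iterator[Dict]:
    for cust_id, name, country in warehouse.execute(
            "SELECT cust_id, cust_nm, ctry FROM cust ORDER BY cust_id"):
        yield {"customer_id": f"WH-{cust_id}", "name": name, "country": country}


# Source 2: records from a SaaS application, shaped differently.
SAAS_CONTACTS = [{"contactId": "a9", "displayName": "Chen", "address": {"countryCode": "es"}}]


def read_saas() -> Iterator[Dict]:
    for c in SAAS_CONTACTS:
        yield {"customer_id": f"SAAS-{c['contactId']}", "name": c["displayName"],
               "country": c["address"]["countryCode"].upper()}


customers = VirtualView()
customers.register(read_warehouse)
customers.register(read_saas)

spanish = [r["name"] for r in customers.rows(where=lambda r: r["country"] == "ES")]
print(spanish)
```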

How to Determine the Best Data Integration Approach for Your Company

The right method depends on your specific data landscape, integration goals, and internal capabilities. Below are some key factors to consider when deciding which approach to adopt:

Define your integration requirements: Start by identifying your data sources, business objectives, and the expected speed and frequency of integration. Determine what level of data governance and quality is needed.

Evaluate data complexity: Consider the variety of formats, models, and sources involved. This will influence whether real-time or batch processing, or a mix, will be the best fit.

Assess your infrastructure and team: Take stock of the resources you have available, including IT infrastructure, data integration tools, and qualified personnel.

Evaluate cost implications: Examine the implementation and maintenance costs of each candidate approach, including software licences, hardware, and personnel.

Select the approach that aligns with your goals: Based on the factors above, compare the data integration approaches discussed earlier and determine which one best suits your specific needs and objectives. Your company may also combine several approaches to achieve better results. Tip: carefully weigh the costs and benefits of each technique before making a decision.
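To show how these factors can feed a decision, here is a deliberately simple, hypothetical rule-of-thumb helper. The `Requirements` fields and the rules are assumptions distilled from the descriptions of each technique in this article (ETL for large batches, ELT when the warehouse can transform, APIs for real-time needs, federation when the team can handle complexity, virtualisation when data should not be moved). It produces a shortlist to discuss, not a verdict, and it may return several approaches since combinations are common.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Requirements:
    """Hypothetical summary of the factors discussed above."""
    needs_real_time: bool            # expected speed and frequency of integration
    large_batch_volumes: bool        # big volumes processed on a schedule
    cloud_warehouse_compute: bool    # target platform can run transformations itself
    can_copy_data: bool              # governance and cost allow moving data physically
    team_can_maintain_complex: bool  # skills and budget for complex setups


def shortlist(req: Requirements) -> List[str]:
    """Return candidate approaches; an empty list means no rule matched."""
    options: List[str] = []
    if req.large_batch_volumes and req.can_copy_data:
        options.append("ELT" if req.cloud_warehouse_compute else "ETL")
    if req.needs_real_time:
        options.append("API-based integration")
        if req.team_can_maintain_complex:
            options.append("Federated integration")
    if not req.can_copy_data:
        options.append("Data virtualisation")
    return options


if __name__ == "__main__":
    print(shortlist(Requirements(
        needs_real_time=True,
        large_batch_volumes=True,
        cloud_warehouse_compute=True,
        can_copy_data=True,
        team_can_maintain_complex=False,
    )))
```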

In summary, on this exciting adventure towards data integration, we've explored different approaches and techniques that will allow you to unify and leverage your information successfully. As on any journey, choosing the right path is crucial to achieving your goals. To make the right choice, identify your company's requirements, examine the complexity of your data, evaluate the resources at your disposal, and weigh the costs of implementing and maintaining the technique you choose.