The financial services industry has spent the last year experimenting with AI. Now, as we approach 2026, I'm seeing the conversation shift from "what's possible" to "what's production-ready." For senior leaders in asset management and custody banking, the question is no longer whether to adopt AI, but which capabilities will become business-critical in the months ahead.

From Experimentation to Business-Critical: The Rise of Agentic AI

2025 marked the year of AI experimentation across banking. Firms rushed to explore how AI could automate processes, accelerate results, and enhance client experiences. Banks developed internal AI solutions grounded in vast libraries of research reports and documents, testing the boundaries of what these technologies could deliver.

But I believe 2026 represents a fundamental shift. The transition from experimentation to business-critical utility is being defined by Agentic AI: systems that possess true "agency." Unlike the static Large Language Models that dominated 2023, these agents can execute multi-step tasks across disparate banking systems without constant human intervention. The financial impact is substantial: McKinsey projects gross cost reductions of up to 70 percent in certain categories, with net aggregate cost decreases of 15 to 20 percent.

In custody banking, I'm seeing this manifest as autonomous corporate action processing. Platforms like our partner All Mates can now parse complex, multi-jurisdictional announcements, reconcile them against client holdings, and execute actions with minimal oversight.
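To make the reconciliation step concrete, here is a minimal sketch of matching a single cash dividend announcement against client holdings. It is not how All Mates or any other platform works: the `DividendAnnouncement` class, the `entitlements` function, the account names, and the placeholder ISIN are all hypothetical, and a real pipeline would also handle record dates, elections, tax, and multiple jurisdictions.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)
class DividendAnnouncement:
    """A parsed cash dividend announcement (hypothetical structure)."""
    isin: str
    rate_per_share: Decimal
    currency: str


def entitlements(announcement, holdings):
    """Return the cash entitlement per account for one announcement.

    `holdings` maps account -> {isin: quantity}. Accounts that do not
    hold the announced security are left out of the result.
    """
    result = {}
    for account, positions in holdings.items():
        quantity = positions.get(announcement.isin, 0)
        if quantity > 0:
            result[account] = quantity * announcement.rate_per_share
    return result


# Illustrative data only; the ISIN is a placeholder, not a real security.
announcement = DividendAnnouncement("XX0000000001", Decimal("0.25"), "GBP")
holdings = {
    "ACC-1": {"XX0000000001": 1000},
    "ACC-2": {"XX0000000002": 500},
}
print(entitlements(announcement, holdings))
```

Using `Decimal` rather than floats is a deliberate choice here, since cash entitlements need exact arithmetic.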

In asset management, we're witnessing synthetic data generators using Generative Adversarial Networks (GANs) to create "black swan" market scenarios that stress-test portfolios beyond historical precedents. The Global Synthetic Data for Banking Market is expected to reach USD 14.36 billion by 2034, up from USD 3.55 billion in 2024, a clear signal of where the industry is heading.
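For readers who want the growth rate implied by those two market-size figures, the calculation is a standard compound annual growth rate. The sketch below uses only the numbers quoted above; the function name `implied_cagr` is my own, and the result is a back-of-the-envelope figure, not one taken from the underlying market report.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate implied by a start and end value."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# USD 3.55 billion in 2024 growing to USD 14.36 billion by 2034
cagr = implied_cagr(3.55, 14.36, 2034 - 2024)
print(f"{cagr:.1%}")  # prints 15.0%
```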

Where AI Tools Are Making the Biggest Impact in Financial Services

Operational and Reporting Automation

In my experience working with banking clients, operational and reporting automation are delivering the most immediate value. We're using AI to execute and deliver faster than ever, but now with genuine intelligence behind the speed. Bank of England research reveals that 55% of all AI use cases involve some degree of automated decision-making, with 24% operating semi-autonomously.

The pressure to adopt is intense. A 2025 IBM study found that 55% of business and financial markets CEOs say the potential productivity gains from automation are so significant that they must accept considerable risk to remain competitive.

I'm seeing practical applications already transforming daily operations. Tools are now mapping unstructured internal data directly to regulatory reporting, dramatically reducing the "reporting tax" that has historically burdened financial firms. Vector databases enable real-time risk exposure aggregation across asset classes, moving away from the batch processing that dominated the last decade. AI generates bespoke, institutional-grade performance commentaries for thousands of individual mandates simultaneously, ensuring compliance with requirements for "clear, fair, and not misleading" communication.
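The move away from batch processing can be illustrated with a small sketch that updates exposure per asset class as each trade event arrives, instead of recomputing everything overnight. This deliberately does not use a vector database; it only shows the event-by-event pattern. The `ExposureAggregator` class and the sample events are hypothetical.

```python
from collections import defaultdict


class ExposureAggregator:
    """Keeps a running net exposure per asset class, updated per event."""

    def __init__(self):
        self._exposure = defaultdict(float)

    def apply(self, asset_class: str, signed_notional: float) -> float:
        """Apply one trade event and return the updated net exposure."""
        self._exposure[asset_class] += signed_notional
        return self._exposure[asset_class]

    def snapshot(self) -> dict:
        """Return a copy of current exposures, safe to hand to a report."""
        return dict(self._exposure)


# Illustrative events: positive for buys, negative for sells.
events = [("equity", 5_000_000), ("fx", -2_000_000), ("equity", -1_500_000)]

aggregator = ExposureAggregator()
for asset_class, notional in events:
    aggregator.apply(asset_class, notional)

print(aggregator.snapshot())
```

Because every event updates the totals immediately, a risk view can be read at any moment without waiting for the next batch run.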

The Next Wave: Conversational Analytics

As automated reporting becomes standard, I'm watching the market demand more from AI in related tasks. The question shifts from "Can we generate reports?" to "Can we analyse them intelligently?" The answer is increasingly yes.

Gartner's 2025 Analytics Trends Report projects that by 2026, 70 percent of analytics interactions will be conversational, a shift that democratises data literacy across business functions. AI tools like our partner Veezoo use Knowledge Graphs to identify connections between seemingly unrelated data, enabling the detection of "hidden risk" across portfolios that traditional analysis would miss.
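To show what "hidden risk" can mean in graph terms, here is a minimal sketch in plain Python. It is not Veezoo's implementation: the entities, relations, and function names are invented for illustration. Two funds hold different instruments, yet both trace back to the same parent issuer once ownership links are followed.

```python
from collections import deque

# Hypothetical knowledge-graph edges: (subject, relation, object)
EDGES = [
    ("Fund A", "holds", "Bond X"),
    ("Fund B", "holds", "Equity Y"),
    ("Bond X", "issued_by", "Issuer SubCo"),
    ("Equity Y", "issued_by", "Issuer HoldCo"),
    ("Issuer SubCo", "subsidiary_of", "Issuer HoldCo"),
]


def build_graph(edges):
    """Build a directed adjacency map, ignoring relation labels."""
    graph = {}
    for subject, _relation, obj in edges:
        graph.setdefault(subject, set()).add(obj)
        graph.setdefault(obj, set())
    return graph


def reachable(graph, start):
    """Return every node reachable from `start` (breadth-first search)."""
    seen, queue = set(), deque([start])
    while queue:
        node = queue.popleft()
        for neighbour in graph.get(node, ()):
            if neighbour not in seen:
                seen.add(neighbour)
                queue.append(neighbour)
    return seen


def shared_exposure(graph, portfolio_a, portfolio_b):
    """Entities that both portfolios are connected to, directly or not."""
    return reachable(graph, portfolio_a) & reachable(graph, portfolio_b)


graph = build_graph(EDGES)
print(shared_exposure(graph, "Fund A", "Fund B"))
```

A holdings-level comparison would show no overlap between the two funds; the common exposure only appears once the issuer hierarchy is traversed.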

The Regulatory Reality: What You Can Actually Deploy

The Adoption-Risk Balance

The enthusiasm for AI in financial services is undeniable, but I've observed it's tempered by regulatory realities. According to IBM's 2025 Global Outlook for Banking and Financial Markets, 60% of banking CEOs acknowledge they must accept significant risk to harness automation advantages, yet 78% of banks still take only a tactical approach to generative AI.

A Bank of England survey reveals that 72% of firms are now actively using or developing AI, with that number expected to triple in the coming three years. However, this accelerated deployment amplifies key risks. Firms identified data bias as the primary threat to consumers and lack of AI explainability as the foremost risk to their own safety and soundness.

Regulatory Frameworks Shaping AI Deployment

Following BCBS 239 principles for effective risk data aggregation and risk reporting, the Bank of England, Prudential Regulation Authority (PRA), and Financial Conduct Authority (FCA) have published crucial regulations. SS1/23 establishes Model Risk Management principles for banks, while SS1/21 addresses operational resilience and impact tolerances for important business services, requiring firms to "avoid foreseeable harm."

The FCA considers the Senior Managers and Certification Regime (SM&CR) to provide the right framework to respond quickly to innovations, including AI. All of this has led to what I see as widespread adoption of "Human-in-the-loop" architectures, where AI can propose actions, but humans explain, supervise, and authorise high-value decisions.
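A human-in-the-loop gate of this kind can be sketched in a few lines. The example below is illustrative only: the `ProposedAction` fields, the `route_action` function, and the GBP 1,000,000 threshold are assumptions of mine, not values drawn from any regulation or supervisory statement.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    AUTO_EXECUTE = "auto_execute"
    HUMAN_REVIEW = "human_review"


@dataclass(frozen=True)
class ProposedAction:
    """An action proposed by an AI agent (hypothetical structure)."""
    description: str
    notional_gbp: float  # monetary value at stake
    rationale: str       # model-supplied explanation, kept for audit


# Hypothetical policy threshold, set by the firm, not by a regulator.
REVIEW_THRESHOLD_GBP = 1_000_000


def route_action(action: ProposedAction,
                 threshold: float = REVIEW_THRESHOLD_GBP) -> Route:
    """Decide whether a proposal may run automatically or needs sign-off."""
    # An unexplained proposal always goes to a human, whatever its size.
    if not action.rationale.strip():
        return Route.HUMAN_REVIEW
    # High-value decisions require human authorisation.
    if action.notional_gbp >= threshold:
        return Route.HUMAN_REVIEW
    return Route.AUTO_EXECUTE


small = ProposedAction("Rebalance cash sweep", 50_000, "Drift above tolerance")
large = ProposedAction("Unwind swap position", 5_000_000, "Limit breach")
print(route_action(small).value, route_action(large).value)
```

The point of the design is that the agent never decides its own level of autonomy: routing sits outside the model, where it can be audited and changed by the accountable senior manager.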

Why AI Tools Fail and What Foundations Make Them Work

The Trust Imperative

When I discuss AI in financial services with clients, I remind them we're not simply talking about automating decision-making or replacing human processes. We're talking about maintaining the security of the national and international financial system, meeting regulatory requirements, and assuming social responsibility. It's about trust and our lives. In this context, AI cannot fail. It cannot be merely a "black box." Would you trust it if we couldn't explain how it works?

In my experience, AI tools primarily fail due to foundational issues with the data they rely on, which can lead to biased and uninterpretable outcomes. The FCA identified the biggest risk for consumers as "data bias and data representativeness," while for firms the greatest risk was "a lack of AI explainability." Both problems stem from poor-quality, incomplete, or non-representative training data and the governance failures that follow, compounded by private third-party models that remove accountability from the humans and firms responsible for AI deployment.

The Non-Negotiable Foundations for Scale

To operate AI at scale, I've found that organisations must establish three critical capabilities:

Modern Data Architecture and Streaming Pipelines: Leading firms I work with have moved beyond indiscriminately dumping data into centralised "lakes," which too often degrade into ungoverned swamps. Instead, they adopt a data fabric approach: a metadata-driven layer that enables AI systems to securely access data where it resides. This ensures consistent "golden source" integrity across the enterprise, including in real-time and streaming scenarios.

Augmented Analytics and Semantic Interoperability: I've seen AI fail repeatedly when it lacks contextual understanding. Misinterpreting "settlement date" versus "trade date" across systems can undermine analytics, automation, and decision-making. A unified semantic layer establishes shared meaning across data sources and is a critical prerequisite for accurate data tracking, augmented analytics, and agentic automation at scale.

RegTech Automation and LLM Guardrails: Without high-quality, governed data, I know large language models are prone to producing inaccuracies, particularly in sensitive domains such as ledger and regulatory data, where errors are unacceptable. AI pipelines must embed compliance, control, and explainability as first-class workloads. Robust guardrails, auditability, and regulatory automation are essential to ensure trust, safety, and regulatory alignment.
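Two of these foundations lend themselves to a small illustration. The sketch below first shows a semantic layer as a mapping from system-specific field names to shared terms, so that "settlement date" and "trade date" cannot be confused, and then a very simple guardrail that flags any figure in generated text that is absent from governed data. The system names, field names, and functions are all hypothetical, and a production guardrail would be far stricter than a regular expression.

```python
import re

# Hypothetical mapping from (source system, local field) to a canonical term.
SEMANTIC_MAP = {
    ("oms", "trd_dt"): "trade_date",
    ("custody", "deal_date"): "trade_date",
    ("custody", "value_date"): "settlement_date",
}


def to_canonical(system: str, record: dict) -> dict:
    """Rename a record's fields to canonical terms; fail on unmapped fields."""
    canonical = {}
    for field, value in record.items():
        key = (system, field)
        if key not in SEMANTIC_MAP:
            # Refusing to guess is the point: unmapped fields are rejected.
            raise KeyError(f"No semantic mapping for {system}.{field}")
        canonical[SEMANTIC_MAP[key]] = value
    return canonical


NUMBER = re.compile(r"-?\d+(?:\.\d+)?")


def unsupported_figures(generated_text: str, governed_values: set[str]) -> list[str]:
    """Return numbers quoted in the text that are absent from governed data."""
    return [n for n in NUMBER.findall(generated_text) if n not in governed_values]


record = to_canonical("custody", {"deal_date": "2025-03-03", "value_date": "2025-03-05"})
print(record)

draft = "The fund returned 4.2 percent against a benchmark of 3.9 percent."
print(unsupported_figures(draft, {"4.2"}))
```

In this toy run the benchmark figure is flagged because it does not appear in the governed set, which is the behaviour one would want before any commentary reaches a client or a regulator.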

Build, Buy, or Partner? Making the Right Strategic Choice

The CEO Dilemma

According to the IBM 2025 CEO Study, 64% of CEOs report that fear of falling behind is pushing them to invest in technologies before fully understanding the value they may deliver. Simultaneously, 67% believe that true differentiation depends on placing the right expertise in the right roles, supported by the right incentives. Over the next three years, 31% of the workforce will require retraining or reskilling.

The results have been mixed. Over the past three years, CEOs indicate that only 25% of AI initiatives have achieved their expected return on investment, and just 52% say their Generative AI investments are delivering value beyond cost reduction. However, I'm encouraged that optimism remains strong: 85% of CEOs expect positive ROI from scaled AI efficiency and cost-saving initiatives by 2027.

Evaluating Your Options

In my conversations with senior leaders, I help them think through their strategic approaches:

Build: When AI provides an exclusive advantage, such as a unique signal extraction or decision model, building internally allows organisations to retain full intellectual property ownership. However, I always caution that this approach requires highly skilled teams, a long-term evolution and maintenance roadmap, and dedicated client support. It is often complex, resource-intensive, and costly.

Buy: For commoditised capabilities such as analytics, KYC, and AML screening, or basic document extraction, I typically recommend purchasing third-party solutions as it's more cost-effective and enables rapid access to continuous innovation driven by the broader user community. That said, I advise leaders to carefully evaluate long-term contracts, pricing stability, and potential data or process lock-in that may limit future flexibility.

The 10–20–70 Rule

BCG recommends that executives anchor their AI adoption strategy in the 10–20–70 rule, and I wholeheartedly agree. While algorithms account for 10% of the effort and technology and data for 20%, the remaining 70% depends on people and processes. Sustainable value creation will come not from technology alone, but from how effectively organisations enable their teams and redesign the way work gets done.

This is where I believe specialised partners like Keyrus play a critical role. The traditional "Build vs. Buy" discussion often overlooks the complexity of integration. I see our role as acting as the connective tissue, integrating advanced AI models and tools into legacy environments, ensuring regulatory compliance, and handling the technical heavy lifting. This allows internal IT teams, frequently constrained by operational demands, to move faster and with greater confidence.

The 2026 Differentiator: Agentic Maturity

Winners vs. Those Trapped in Pilot Purgatory

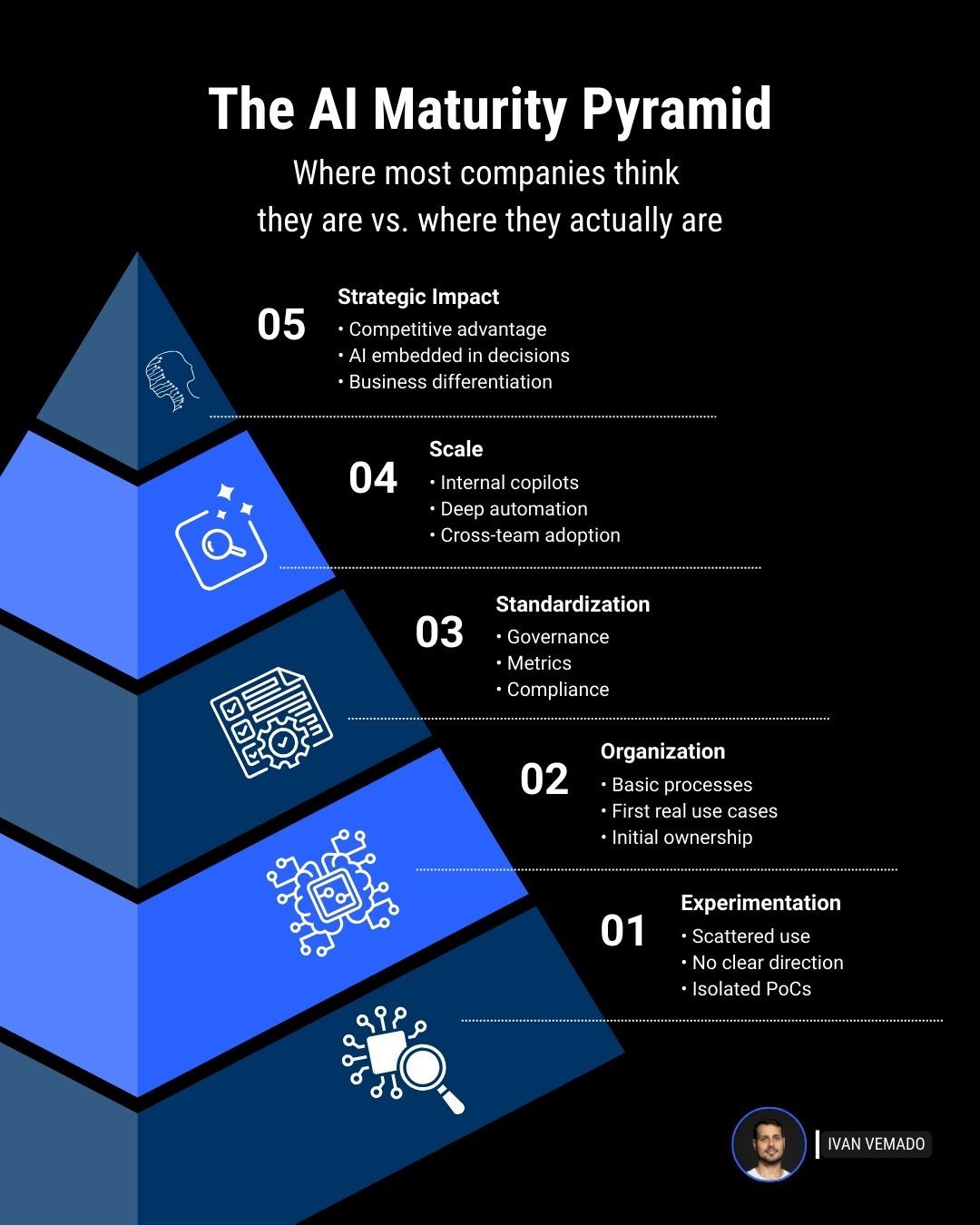

By 2026, I predict the true "winners" will be defined by their level of agentic maturity. These organisations will have moved beyond treating AI as a productivity tool and toward deploying AI as a digital workforce. They will operate an "AI Control Room", similar to a trading desk, where hundreds of autonomous agents are monitored, orchestrated, and optimised. Their governance frameworks will be adaptive and outcome-driven, evolving at the pace of technological change rather than slowing progress through traditional bureaucratic processes.

Less mature organisations will remain trapped in pilot purgatory. I've seen this pattern repeatedly: they accumulate dozens of disconnected proofs of concept that fail to scale because the underlying data architecture remains fragmented and siloed. As a result, they struggle to demonstrate the robustness, traceability, and control of AI-driven risk models, leading to higher regulatory capital requirements and reduced confidence from supervisors such as the PRA.

What Senior Leaders Must Do Now

AI scaling starts at the top, and I can't emphasise this enough. The C-level needs to talk openly about what's changing and support new ways of working as teams learn and adapt. They need to recognise and reward efforts that create real, organisation-wide value, rather than isolated demos that look good but go nowhere. If something can't grow beyond a pilot, I tell my clients it's a signal to rethink the approach.

The next 12 months, in my view, are not about selecting the best AI model. They are about building a resilient, governed, and data-rich environment, one capable of supporting autonomous agents at scale and enabling sustained value creation. For asset management and custody banking, I believe the window to establish these foundations is closing. The firms that act decisively now will be the ones defining the industry standard by 2026.

About the Author

Ivan Vemado is a visionary leader in Artificial Intelligence and Innovation at Keyrus, with over 17 years of proven experience leading digital transformations and delivering innovative solutions that drive growth and efficiency across global markets.