Here's the uncomfortable truth about AI in 2026: it's not the technology that's holding organisations back. It's governance. Or rather, the lack of it. While companies have spent the past few years enthusiastically deploying generative AI tools and experimenting with machine learning models, a sobering reality is emerging.

Nearly 60% of IT leaders plan to introduce or update AI principles this year, yet only about one-third of businesses feel fully prepared to harness the potential of emerging technologies. The gap between AI ambition and AI readiness has never been wider. This isn't just an IT problem anymore. It's a business problem. And in 2026, governance is becoming the single biggest factor determining whether AI projects succeed or fail.

Why Governance Has Become the Make-or-Break Factor

Think about it: over 70% of financial institutions have now integrated advanced AI models into their business-critical applications. These systems are making decisions about credit, fraud detection, customer service, and more. The stakes are enormous. A biased algorithm, a data breach, or a non-compliant model can cost millions in fines, not to mention reputational damage.

Investment in AI governance is rising, yet efforts to make AI trustworthy are not keeping pace with deployment. Organisations are rushing to deploy AI while their oversight capabilities lag dangerously behind. It's like driving faster while your brakes get weaker.

The consequences are real. According to Publicis Sapient's 2026 Guide, AI projects rarely fail due to poor models. They fail because the data feeding them is inconsistent and fragmented. Without proper governance, even the most sophisticated AI system will produce unreliable results.

The Regulatory Wave Is Here

If internal pressures weren't enough, external regulations are tightening the screws. The EU AI Act is now in force, with rules for high-risk AI coming into effect in August 2026. For the most serious violations, non-compliance can result in fines of up to €35 million or 7% of global annual turnover, whichever is higher.
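To make the "whichever is higher" rule concrete, here is a minimal sketch in Python. The function name and the sample turnover figures are illustrative assumptions, and the result is only the ceiling quoted above, not a prediction of any actual penalty.

```python
FIXED_CAP_EUR = 35_000_000  # fixed ceiling quoted above
TURNOVER_SHARE_PCT = 7      # share of global annual turnover quoted above


def max_fine_eur(global_annual_turnover_eur: int) -> int:
    """Return the upper bound on the fine: the higher of the two ceilings."""
    turnover_based = global_annual_turnover_eur * TURNOVER_SHARE_PCT // 100
    return max(FIXED_CAP_EUR, turnover_based)


print(max_fine_eur(100_000_000))    # 35000000 (the fixed cap dominates)
print(max_fine_eur(2_000_000_000))  # 140000000 (the turnover share dominates)
```

For a company with less than €500 million in turnover, the fixed cap is the binding figure; above that, the 7% share takes over.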

But this isn't just a European issue. Regulators are converging around a unified principle: AI risks must be managed with the same rigour as traditional model risk, but with additional focus on transparency, bias mitigation, and accountability. From NIST's AI Risk Management Framework in the United States to emerging regulations in Asia, the message is clear: get your governance house in order, or face the consequences.

Organisations selling AI in European markets must understand the EU AI Act's requirements or risk operational disruption and regulatory penalties. With compliance deadlines approaching fast, companies that haven't started their governance journey are already behind.

The Three Pillars of AI Governance That Actually Work

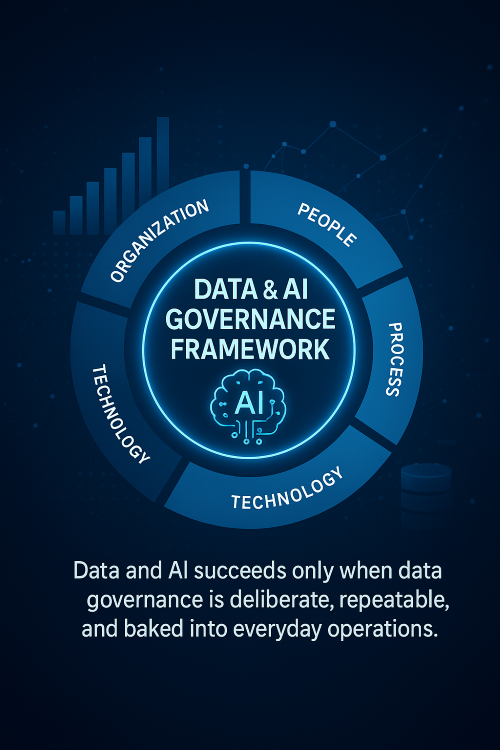

So, what does effective AI governance look like in practice? It's not about creating more bureaucracy or slowing down innovation. It's about building systems that are trustworthy, safe, and compliant from the ground up. Here are the three essential pillars:

1. Model Risk Management

Traditional model risk management frameworks are struggling to keep up with modern AI systems. As models become more dynamic and are retrained in real time with streaming data, model risk management must evolve into a continuous assurance function. This means implementing:

Explainable AI (XAI) to understand how black-box models produce their outputs

Real-time monitoring for model drift, bias, and data quality issues

Robust documentation that meets regulatory standards

Third-party oversight for vendor-supplied models

None of this is optional anymore. Explainability, continuous monitoring, and sound documentation are the foundation of responsible AI deployment.
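As one possible illustration of monitoring for drift, the sketch below computes a Population Stability Index (PSI) between a baseline sample and a recent sample of a single numeric feature. PSI is one common drift metric among several, not a method prescribed by any of the sources cited here. The function names, the ten equal-width bins, and the 0.2 alert threshold (a widely used rule of thumb) are all assumptions for the example, and both samples are assumed to be non-empty.

```python
import math
from typing import List, Sequence


def psi(expected: Sequence[float], actual: Sequence[float], bins: int = 10) -> float:
    """Population Stability Index of `actual` against the `expected` baseline."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the baseline is constant

    def proportions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # Floor empty bins at a small value so the logarithm stays defined.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def drift_alert(expected: Sequence[float], actual: Sequence[float],
                threshold: float = 0.2) -> bool:
    """Flag drift when PSI exceeds the (assumed) rule-of-thumb threshold."""
    return psi(expected, actual) > threshold


baseline = [i / 100 for i in range(100)]       # feature values at training time
recent = [0.5 + i / 200 for i in range(100)]   # same feature, shifted upwards
print(drift_alert(baseline, baseline))  # False
print(drift_alert(baseline, recent))    # True
```

In practice a check like this would run on a schedule for each monitored feature and for the model's output scores, with alerts routed to the model owner.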

2. Data Quality and Governance

Here's a hard truth: AI won't fail for lack of models. It will fail for lack of data discipline. Poor data quality is the silent killer of AI projects. According to Gartner, poor data quality costs companies an average of $15 million every year. In 2026, as AI moves deeper into sensitive processes like finance, healthcare, and compliance, the cost is only rising. Effective data governance requires:

Regular data audits for accuracy and completeness

Automated data quality monitoring

Clear data lineage and provenance tracking

Privacy protection and encryption measures

Cross-functional data stewardship with clear ownership

Nearly 70% of surveyed executives plan to strengthen internal data governance frameworks by 2026. The organisations that succeed will be those that treat data governance not as a compliance checkbox, but as a strategic asset.
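Automated data quality monitoring can start very small. The sketch below measures field completeness across a batch of records and lists the fields that fall below a threshold. The record layout, the field names, and the 95% threshold are hypothetical choices for illustration only.

```python
from typing import Any, Dict, List


def completeness(records: List[Dict[str, Any]], required: List[str]) -> Dict[str, float]:
    """Share of records in which each required field is present and non-empty."""
    total = len(records)
    report = {}
    for field in required:
        filled = sum(1 for r in records if r.get(field) not in (None, ""))
        report[field] = filled / total if total else 0.0
    return report


def failing_fields(report: Dict[str, float], threshold: float = 0.95) -> List[str]:
    """Fields whose completeness is below the (assumed) acceptance threshold."""
    return sorted(f for f, ratio in report.items() if ratio < threshold)


batch = [
    {"customer_id": 1, "income": 52_000},
    {"customer_id": 2, "income": None},
    {"customer_id": 3},
    {"customer_id": 4, "income": ""},
]
report = completeness(batch, ["customer_id", "income"])
print(report)                  # {'customer_id': 1.0, 'income': 0.25}
print(failing_fields(report))  # ['income']
```

A real audit would add checks for validity, freshness, and lineage, but even a completeness report like this gives data stewards a concrete number to own.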

3. MLOps and Continuous Monitoring

Deploying an AI model isn't the end of the governance story. It's just the beginning. The shift toward continuous assurance is accelerating, with AI-driven analytics now able to assess entire data populations in real time, detecting anomalies, policy deviations, or compliance lapses as they happen. MLOps provides the operational framework to ensure AI systems remain reliable, fair, and compliant throughout their lifecycle. This includes:

Automated deployment pipelines with built-in governance checks

Version control and audit trails for all model changes

Real-time performance and fairness monitoring

Automated alerts for model degradation or bias drift

Secure model serving with proper access controls

Data ingestion and preparation governance establishes controls for data quality, data balance, and privacy, creating the foundation for responsible model development. When combined with robust MLOps practices, organisations can scale AI confidently while maintaining full control and accountability.
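A deployment pipeline with built-in governance checks might look something like the following sketch: a release gate that compares a candidate model's metrics against a policy and writes an audit record either way. The policy thresholds, the metric names (`accuracy`, `parity_gap`), and the candidate's fields are hypothetical, and a real pipeline would plug this into whatever CI/CD and model registry tooling is already in use.

```python
import hashlib
import json
from dataclasses import dataclass
from typing import Any, Dict, List


@dataclass(frozen=True)
class Policy:
    min_accuracy: float = 0.90      # assumed minimum acceptable accuracy
    max_parity_gap: float = 0.10    # assumed maximum gap in outcomes between groups
    require_model_card: bool = True


def check_release(candidate: Dict[str, Any], policy: Policy) -> List[str]:
    """Return the list of policy violations; an empty list means the gate passes."""
    violations = []
    if candidate["accuracy"] < policy.min_accuracy:
        violations.append("accuracy below minimum")
    if candidate["parity_gap"] > policy.max_parity_gap:
        violations.append("fairness gap above maximum")
    if policy.require_model_card and not candidate.get("model_card"):
        violations.append("model card missing")
    return violations


def audit_entry(candidate: Dict[str, Any], violations: List[str]) -> Dict[str, Any]:
    """Build an audit record with a fingerprint of the evaluated candidate."""
    payload = json.dumps(candidate, sort_keys=True).encode("utf-8")
    return {
        "model": candidate["name"],
        "version": candidate["version"],
        "fingerprint": hashlib.sha256(payload).hexdigest(),
        "approved": not violations,
        "violations": violations,
    }


candidate = {"name": "credit-scoring", "version": "1.4.0",
             "accuracy": 0.93, "parity_gap": 0.14, "model_card": ""}
problems = check_release(candidate, Policy())
print(problems)  # ['fairness gap above maximum', 'model card missing']
print(audit_entry(candidate, problems)["approved"])  # False
```

Recording the decision for rejected candidates as well as approved ones is a deliberate choice here: the audit trail then shows what was blocked and why, not only what shipped.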

Balancing Innovation with Oversight

One of the biggest misconceptions about AI governance is that it slows down innovation. The opposite is true. Organisations with strong governance achieve higher long-term ROI because AI becomes sustainable, scalable, and aligned with business objectives.

Think of governance as the guardrails that allow you to drive faster, not the brakes that slow you down. With proper frameworks in place, data science teams can move quickly because they know their work meets compliance standards. Business stakeholders can trust AI insights because they're backed by solid data. And executives can sleep better knowing their AI systems won't become tomorrow's headlines.

In 2026, AI governance won't just be a risk or compliance team issue; it will become a core business responsibility. The first line of defence, including product owners and data science leaders, will take a more active role in defining and maintaining governance frameworks.

Implementing Frameworks That Scale

So where do you start? Two key frameworks have emerged as industry standards.

ISO/IEC 42001 provides a certifiable management system for AI governance. It's comprehensive, covering everything from organisational governance to risk management and compliance. It establishes the necessary steps for managing AI systems responsibly and aligning them with ethical principles, regulatory requirements, and industry standards.

The NIST AI Risk Management Framework offers a more flexible, risk-based approach. It is structured around four functions (Govern, Map, Measure, and Manage), which guide organisations through risk identification, assessment, mitigation, and governance.

The good news? You don't have to choose. Use NIST's flexible risk guidance to inform the implementation of ISO's structured, certifiable system. Together, they provide both the strategic vision and operational framework needed for comprehensive AI governance.

The Real Cost of Waiting

The cost of inaction goes beyond regulatory fines. Companies without proper governance face:

Higher risk of AI project failures

Increased vulnerability to bias and ethical issues

Greater exposure to security threats

Loss of stakeholder trust

Competitive disadvantage as governance becomes a differentiator

Almost half of surveyed IT leaders plan to increase AI-related budgets by 20% or more in 2026, with top investment areas including governance automation, AI risk tooling, and talent development. The market is moving. The question is whether you're moving with it.

Moving Forward with Confidence

Building trustworthy, safe, and governed AI systems in 2026 isn't about perfection. It's about progress. Start by:

Assessing your current state: Conduct an AI inventory and identify gaps in your governance framework

Prioritising high-risk systems: Focus governance efforts where they'll have the biggest impact

Building cross-functional teams: Bring together data science, legal, compliance, and business stakeholders

Implementing automation: Use governance platforms that can scale with your AI ambitions

Creating feedback loops: Establish continuous monitoring and improvement processes
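The first two steps, taking an inventory and prioritising high-risk systems, can be sketched as a simple scored list. The attributes and weights below are hypothetical and deliberately crude; they are not taken from the EU AI Act, NIST, or ISO, and a real assessment would use the risk criteria your organisation has agreed on.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class AISystem:
    name: str
    business_critical: bool
    uses_personal_data: bool
    has_owner: bool
    is_monitored: bool


def risk_score(system: AISystem) -> int:
    """Illustrative score: higher impact and weaker oversight both add points."""
    score = 0
    score += 2 if system.business_critical else 0
    score += 2 if system.uses_personal_data else 0
    score += 1 if not system.has_owner else 0
    score += 1 if not system.is_monitored else 0
    return score


def prioritise(inventory: List[AISystem]) -> List[AISystem]:
    """Order the inventory so the riskiest systems are reviewed first."""
    return sorted(inventory, key=risk_score, reverse=True)


inventory = [
    AISystem("internal-search", False, False, True, False),
    AISystem("credit-scoring", True, True, False, False),
    AISystem("support-chatbot", False, True, True, True),
]
for system in prioritise(inventory):
    print(system.name, risk_score(system))
# credit-scoring 6
# support-chatbot 2
# internal-search 1
```

Even a rough ranking like this makes gaps visible, such as a business-critical system with no named owner.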

Model risk management is evolving from a compliance checkpoint into a strategic differentiator. Firms that treat it as a dynamic, AI-enabled framework rather than a static control function will be best positioned to build resilience, trust, and competitive advantage.

The organisations that will thrive in 2026 and beyond are those that recognise a fundamental truth: AI governance isn't a constraint on innovation. It's the foundation that makes sustainable innovation possible. The time to act isn't tomorrow. It's today. Because in the world of AI governance, being reactive isn't just risky. It's expensive, disruptive, and potentially business-threatening. The companies building robust governance frameworks now are the ones that will confidently scale AI, while others are still trying to figure out the basics.

Where does your organisation stand?

Looking to strengthen your AI governance framework? Keyrus specialises in helping organisations build trustworthy AI systems through robust data governance, MLOps implementation, and comprehensive risk management strategies. Contact us to learn how we can support your AI governance journey.