In this blog, we will describe the steps which we need to follow in order to create an API interface to interact with the Snowflake data.

Table of contents:

Introduction

Architecture Design

Set up the Infrastructure / Code & Datasets

Setting up Snowflake

Set up Warehouse for concurrency

Set up Users and roles

Setting up the AWS Lambda Function

Setting up Snowflake Connector Libraries

Set up event Listener in the Lambda function

Terraform code to set up AWS Lambda function

Setting up API Gateway to call the Lambda function

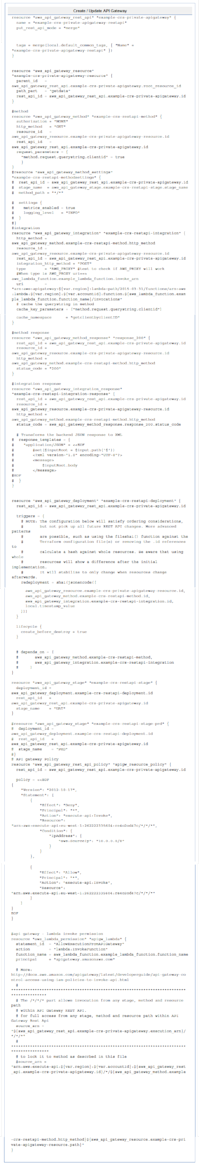

Terraform code to create API Gateway

Introduction

Here we will see, how we can fetch the data in Snowflake from an external system using GET method through API Gateway and AWS Lambda functions. However, we can follow the same principle to implement other methods like POST / PUT to insert or update the target data set.

Some of the primary advantages of using this architecture are listed below :

Less maintenance cost The assets that have been used to build this platform are managed by the providers (AWS and Snowflake) and we do not need to spend extra man-hours to ensure these are up and running. In case of any issue, we can raise an incident with the providers and it will be attended within the agreed upon SLA.

Serverless Architecture, hence scalable The components being used can be tuned for various degrees of performance and can be adjusted in the run time without downtime. For example, the snowflake warehouse which will be used to query the data can be configured for parallel processing by adding clusters and the AWS Lambda is already configured to cater ~1000 requests concurrently by default. In case we need to increase the concurrency threshold, we can raise a request with the AWS account manager and it can be added with extra cost.

Ease of adding new features and functionalities We can extend the features and functionalities of the implemented resource and methods by adding new views and models to the existing Lambda function without the need to bring the system down. The request which is made through the API Gateway can send additional query parameters without any code change. We just need to add event handlers in AWS Lambda to use these parameters in the subsequent calls.

Ability to track data consumption and usage patterns Based on the user and the query parameters, we can juggle between which Warehouse should be used to fetch data from Snowflake and thus we can set up notifications and limit the usage of each user. In addition to this, we can also find which user has executed the queries and how many credits have been consumed as a result. So, the billing and invoicing process can be made transparent.

Architecture Design

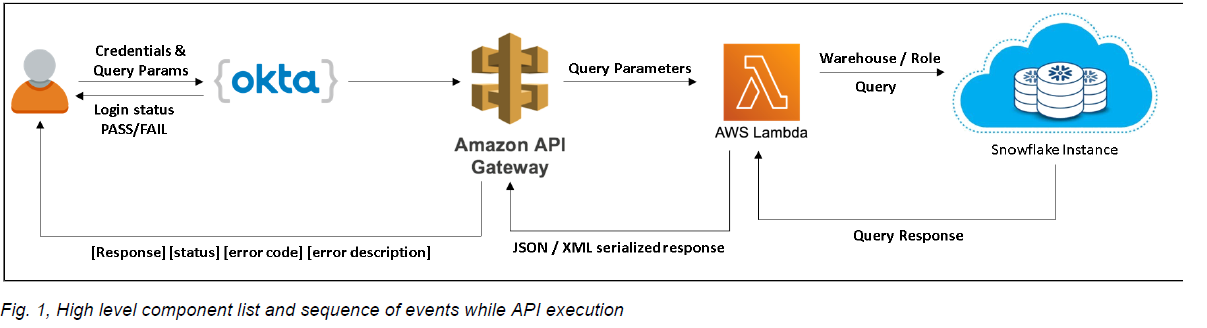

Listed below are the main components which are used to deliver the capability of data sharing using APIs from Snowflake and it also illustrates the stages of the execution and how the data is returned from Snowflake instance to the end user.

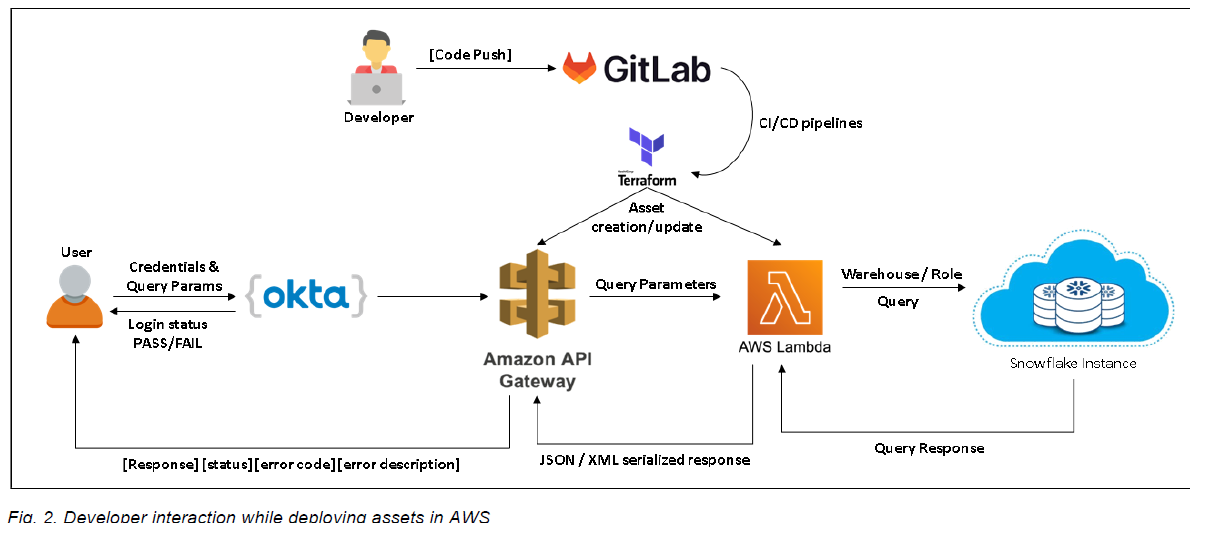

After we have established how the API execution will happen, we need to build a framework on how the developer / development team will interact with the above architecture to add features and functionalities. The below diagram is an attempt to showcase the same.

Set up the Infrastructure / Code & Datasets

✓ Setting up Snowflake

Before we start to set-up the AWS components, we need to ensure that the data which we are looking forward to share with users / third party applications is available in Snowflake and we are able to query the data set using the parameters which we are supposed to send via API Gateway.

Set up Warehouse for concurrency

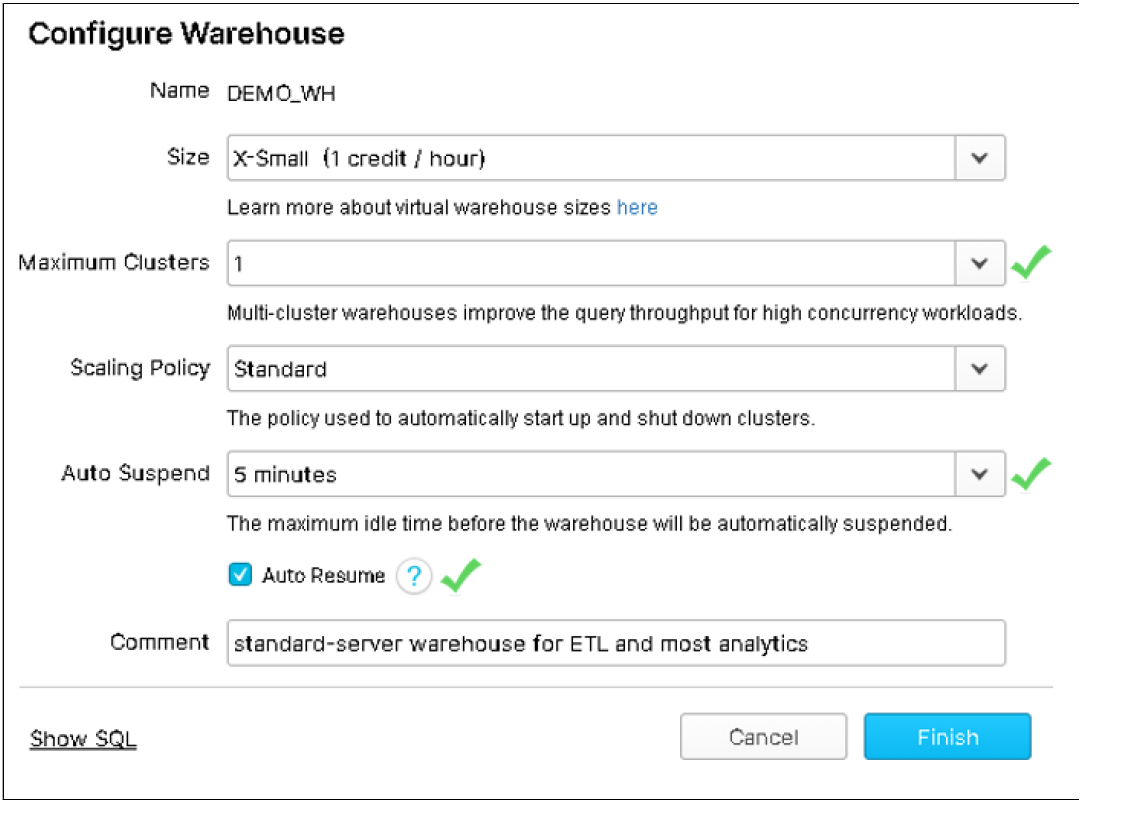

Generally, we dedicate a specific warehouse in a snowflake account which will be responsible for fetching the data when an API call is made. This is done so that we can track the API operations and limit them if necessary plus it is easy to finetune the warehouse parameters such as MAX_CONCURRENCY_LEVEL, SCALING POLICY, Auto Suspend etc. If we are writing a GET method, then we would want our warehouse to scale out automatically to a certain limit (8 by default but it can be increased) when the demand rises and when the demand stops or reduces, the warehouse should auto suspend or reduce the number of clusters being used.

Set up Users and roles While we are creating a Lambda function which will interact with snowflake, we usually create a service account using which the code will interact with the data assets in Snowflake. The user credentials and roles can be stored in the AWS secrets manager from where they can be fetched at run time by the Lambda function.

✓ Setting up the AWS Lambda Function

We have to choose the language using which we are going to create our Lambda function before we start to develop the same. This enables the AWS environment to run our code in the chosen run time environment. For this use case we have used Python 3.8.

Set up Snowflake Connector Libraries This is one of the most important steps before we start our coding. It is important to note that the snowflake connector binaries are not the same for the different Operating Systems. If we use the same snowflake libraries which we used to develop and test our python code in Windows, the same will never run when we push the dependencies and run the Lambda function. The AWS Lambda function runs on a Linux platform and hence it requires the snowflake connector library for Linux.

There are several ways to get these libraries, we can use a Windows Subsystem for Linux / Use a Linux machine altogether to code. However, the easiest and most effective way to ensure that the library is compatible with the AWS Lambda platform is to download the libraries through a simple ec2 instance.

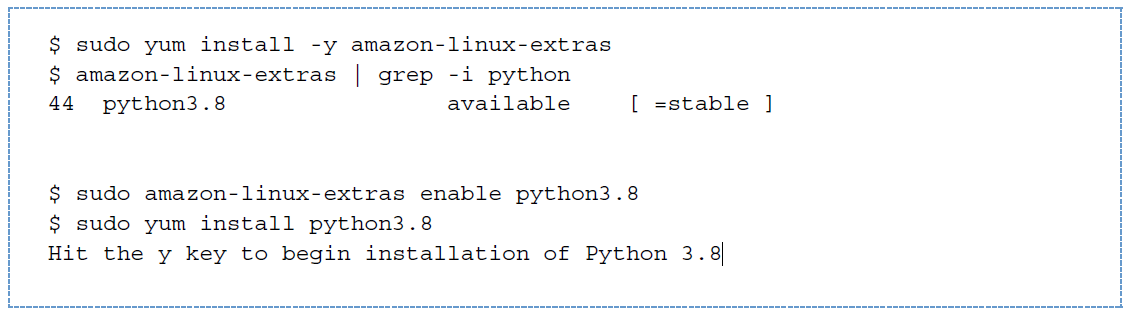

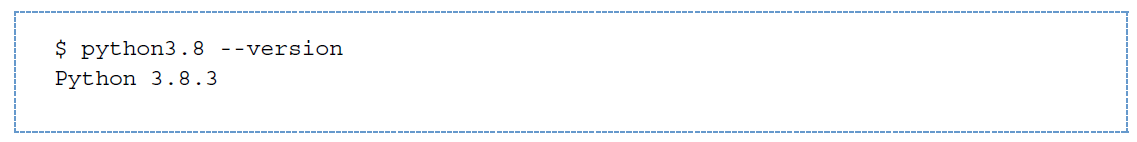

Once the ec2 instance is up and running, please login to the same and install the python 3.8 version (by default ec2 has python 2.7) because we are using the same platform for running our AWS Lambda function.

Check if the installation is successful by running the below command

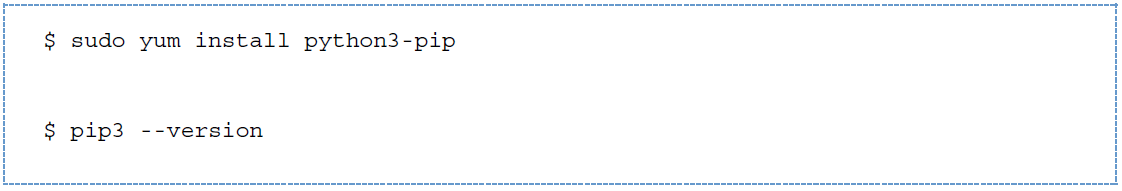

Once python 3.8 is installed, we now need to install pip and check the version of the same

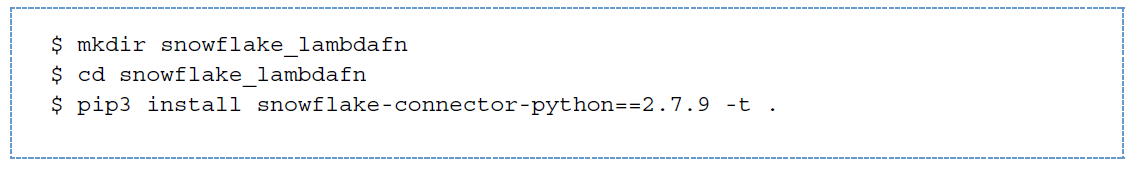

Upon installing pip, we need to create a directory where we would download the snowflake connector library

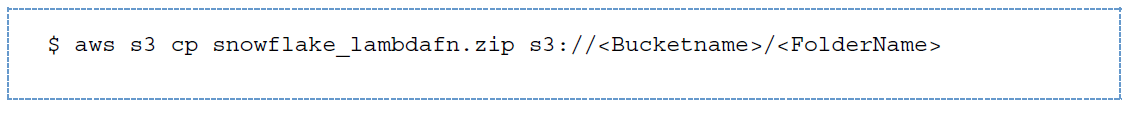

When the snowflake connector is downloaded, we need to zip the directory contents so that the lambda_function.py is present in the root. After the files are compressed, we need to either download them in local or upload the zip file to an s3 location by running the below command

Next step is to either send the zip file directly to AWS lambda by providing the s3 path of the zip file or download the file in local and push the libraries in git repository.

**It is important to note that still we may be getting "module not available" error if pushed to git because a few files miss out to be pushed in remote repository if the __init__.py is blank in the same folder. In case this happens, we have to carefully upload those directories and files manually.

Set up event Listener in the Lambda function

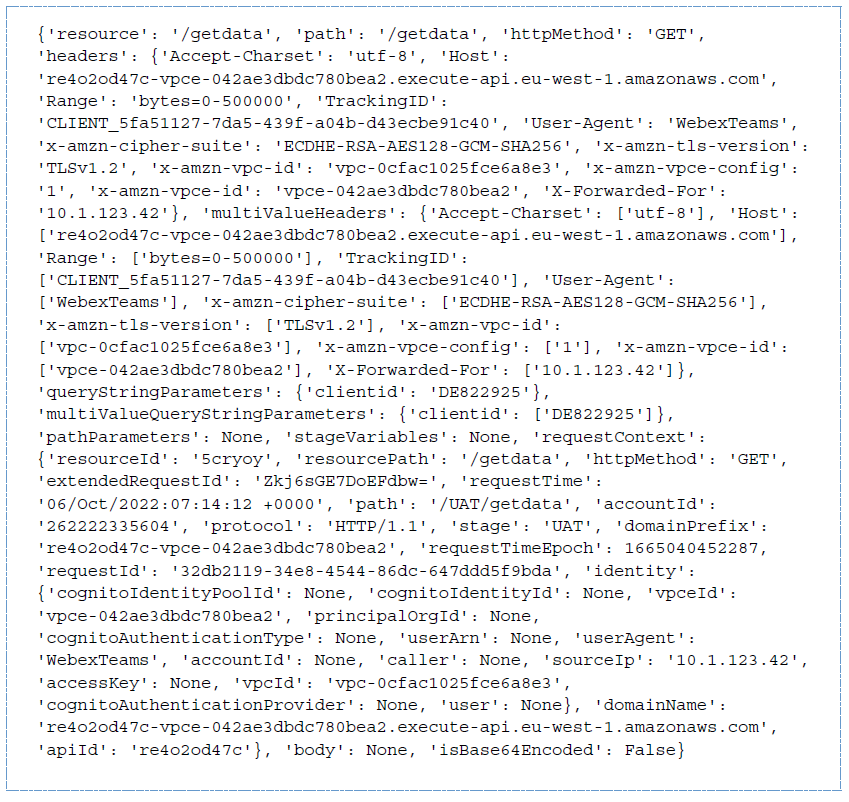

Before we set up the event listener in the Lambda function, we need to understand how an event looks like when called upon from an API gateway. A typical event looks like:

If we closely see the above piece of information, we can see that the query parameter and value is passed in a key : value format under the 'queryStringParameters' tag. So, we will write the below code in our lambda function to refer the incoming value(s) :

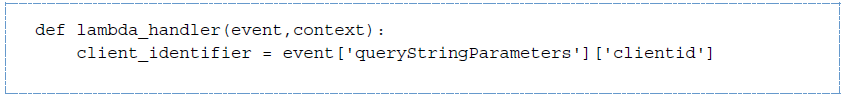

Terraform code to set up AWS Lambda function

✓ Setting up API Gateway to call the Lambda function

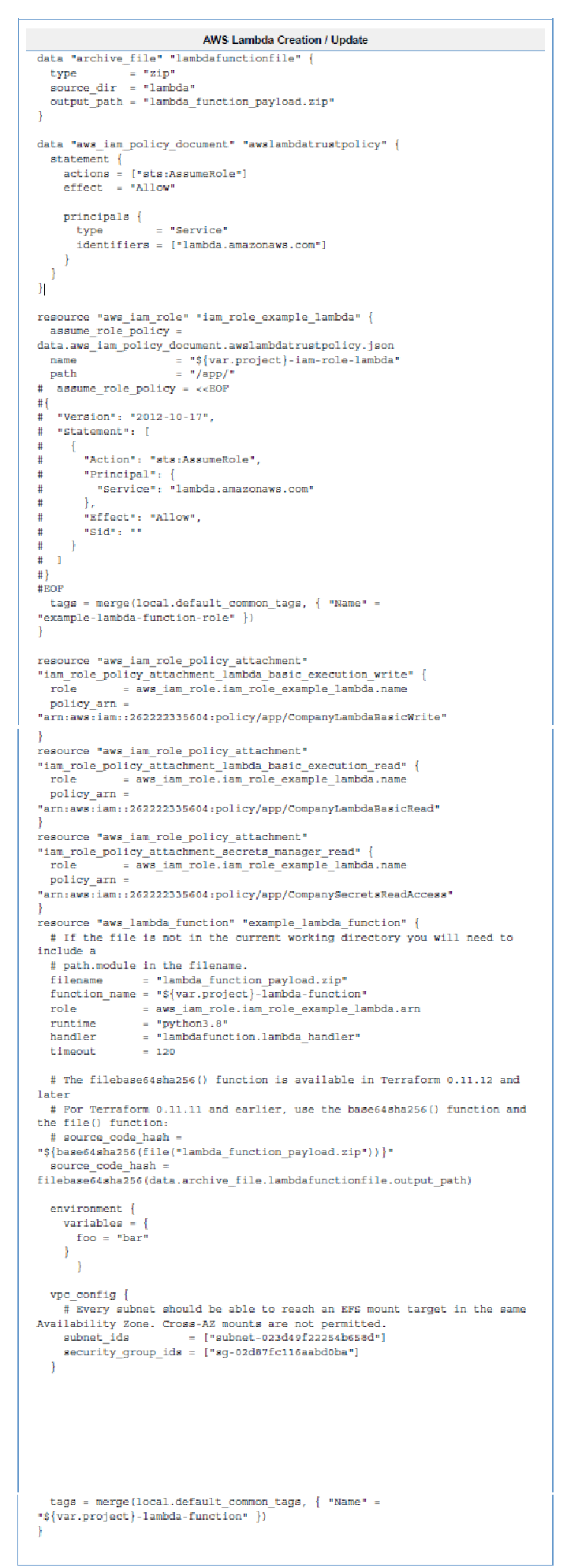

In order to set up the API Gateway, we first have to decide which protocol we are going to use. For the current use case, we are using REST. While creating the API Gateway, first we have to create a resource and we can create multiple methods under that resource. For fetching data from Snowflake, we will be using the GET method.

The most important aspect while configuring an API Gateway which needs to pass event parameters to a Lambda function, we need to check the below option:

If we do not check the option of "Use Lambda Proxy integration", the event which is sent to the Lambda function is blank and the query string key and value will not be passed which in turn will result in an error.

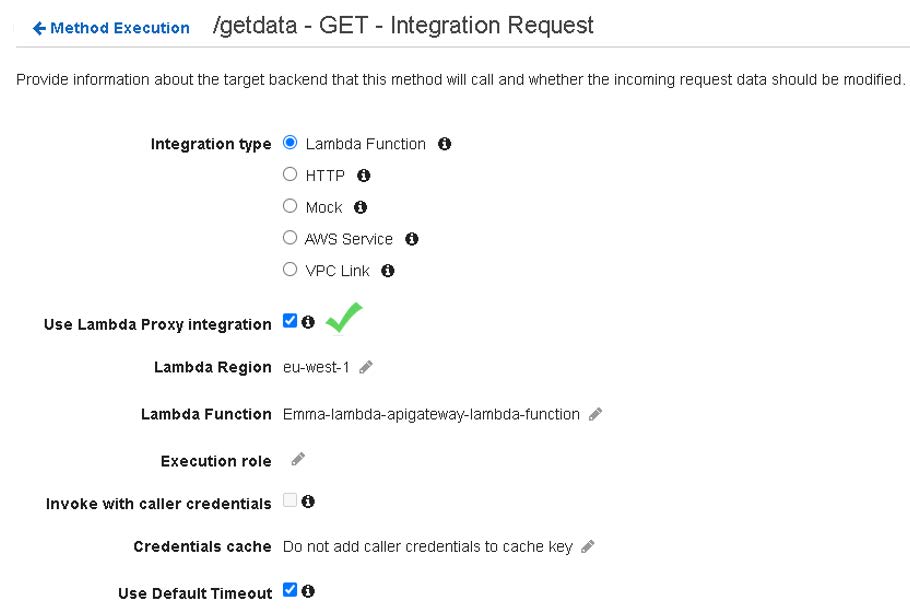

Terraform code to create API Gateway

In this comprehensive guide, we explored the steps to create an API interface for interacting with Snowflake data using AWS Lambda and API Gateway. We learned how to set up the infrastructure, configure Snowflake, create AWS Lambda functions, and establish API Gateway connections. This architecture offers cost-efficiency, scalability, and flexibility for data sharing while ensuring ease of maintenance and robust tracking capabilities. By following these steps, you can harness the power of Snowflake data sharing via APIs to meet your organization's unique needs.