Here's a number that keeps enterprise leaders awake at night: 80-90% of business data is unstructured, growing at 55-65% annually. Global unstructured data volumes have now reached 221 zettabytes and continue accelerating. Yet 95% of businesses recognise managing this data as a significant problem, and 71% struggle with effective protection and governance.

The challenge isn't just volume. Invoices, contracts, purchase orders, claims documents, financial statements, and compliance reports arrive in hundreds of formats across dozens of systems. Generic document AI tools promise automation but collapse under the weight of enterprise complexity, regulatory requirements, and the need for auditability. This is where Azure AI Foundry becomes a strategic advantage rather than just a platform: it supports governed, scalable intelligent document processing systems that turn unstructured chaos into competitive intelligence.

The $109 Billion Problem: Why Most Document AI Projects Never Scale

The intelligent document processing (IDP) market is projected to reach $109.1 billion by 2033. But here's the uncomfortable truth: most IDP implementations fail to scale beyond proof-of-concept. The numbers reveal why. 74% of enterprises now store more than 5 petabytes of unstructured data, a 57% increase over 2024. At roughly 1 KB per page of plain text, that is about 5 trillion pages of documents. Traditional approaches that require manual configuration for each document type simply cannot handle this scale.

But scale is only half the problem. Despite 90% of enterprises using AI in daily operations, only 18% have fully implemented governance frameworks. This disconnect is dangerous. The EU AI Act, which entered into force in August 2024, treats AI systems as high-risk when they are used in areas such as creditworthiness assessment or life and health insurance pricing, and document processing that feeds those decisions can fall within scope. Such systems require comprehensive audit trails, version control, and explainability. Penalties for the most serious violations reach €35 million or 7% of global annual turnover, and 62% of IT leaders now cite reducing data risk from AI as their top business challenge.

Organisations need more than accurate extraction. They need provable, auditable, version-controlled AI document processing for enterprises that meets regulatory standards from day one.

Azure AI Foundry: Built for Production, Not Just Pilots

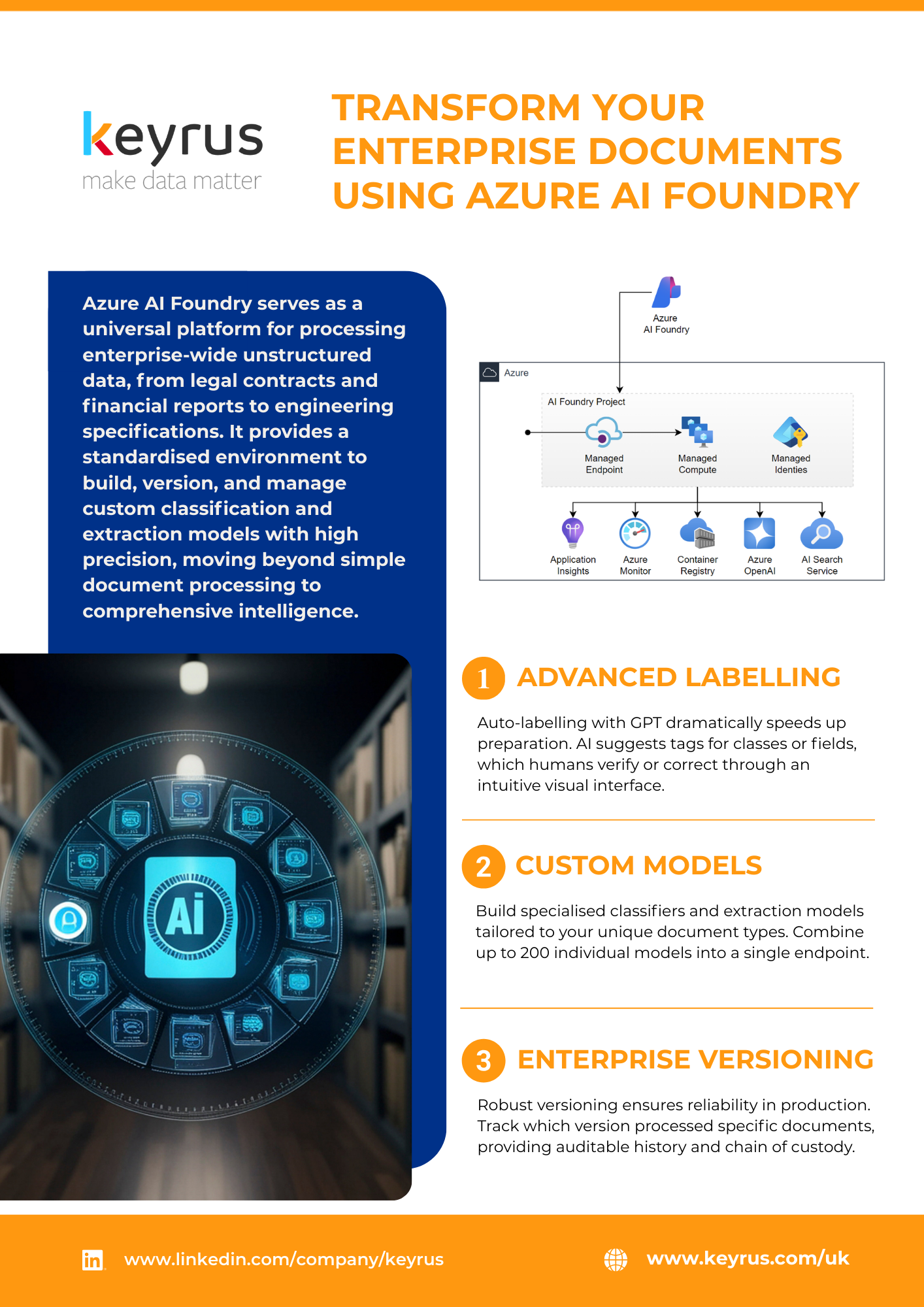

Azure AI Foundry addresses these challenges through an architecture designed for governed, production-grade AI. The platform's adoption tells the story: 80% of Fortune 500 companies now use Azure AI Foundry, processing 50 trillion tokens monthly across 230,000 organisations. This isn't theoretical capability but production scale.

At the heart sits Azure Content Understanding, a multimodal service that extends beyond traditional OCR. It handles handwritten text, low-quality scans, varied fonts, and complex layouts while processing documents, images, audio, and video through a unified interface. The service leverages deployed GPT models for complex field extraction and relationship mapping that traditional OCR cannot achieve. Pre-built models for invoices, receipts, health insurance cards, and tax forms work immediately without training.

The platform operates across 60+ regions in 140 countries with enterprise-grade security and compliance certifications. Organisations achieve an average ROI of $3.70 for every dollar invested in Azure AI solutions, with productivity gains of 15-80%. This is enterprise document AI at scale.

GPT Auto-Labelling: From Weeks to Hours

One of the most transformative Azure AI Foundry use cases is GPT-assisted auto-labelling, which fundamentally changes the economics of custom document processing. Building custom extraction models traditionally requires manual labelling of hundreds or thousands of document examples, which results in weeks or months of data science effort. For enterprises with dozens of proprietary document types, this approach is prohibitively expensive and slow.

GPT auto-labelling enables a radically different approach. With GPT-5 models now powering Azure AI Foundry Agent Service, organisations can deploy the flagship GPT-5 with its 272k-token context window for deep document analysis, or GPT-5-mini for fast, efficient processing. These models analyse representative documents and automatically generate labelled datasets, identifying fields, relationships, and patterns across document variations without manual annotation. Rather than labelling from scratch, analysts review and correct AI-generated labels, reducing effort by 70-80% while ensuring accuracy. The system learns from corrections, continuously improving extraction quality.

With labelled data in place, custom neural models train on up to 2GB of data (10,000 pages) in days rather than months. As new document variations emerge, the system identifies low-confidence extractions for human review, incorporating feedback to maintain accuracy over time. A global financial services firm used this approach to automate the processing of 47 different internal document types. Traditional timeline: 8-12 months. With GPT auto-labelling, six weeks from kick-off to production deployment.

Combining 200+ Models into One Endpoint

AI model versioning and governance become exponentially complex as organisations scale. A typical enterprise might deploy specialised models for invoices, contracts, and claims, region-specific models for different languages, industry-specific extractors, custom models for proprietary documents, and different model versions across business units. Managing this complexity traditionally requires extensive infrastructure and DevOps resources.

Azure AI Foundry solves this through intelligent model orchestration. All models, whether pre-built Document Intelligence extractors, custom neural models, or GPT-powered processors, are accessible through a unified API. Applications don't need to know which model handles which document; the platform routes intelligently. Custom classification models analyse incoming documents and route them to the appropriate extractor. A single submission endpoint can handle invoices, contracts, purchase orders, and claims, each processed by specialised models optimised for that document type.
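The classify-then-route pattern described above can be sketched in a few lines of plain Python. This is an illustrative sketch only: `classify`, `extract_invoice`, `extract_contract`, and the `EXTRACTORS` registry are hypothetical stand-ins, not Azure SDK calls. In a real deployment each function would wrap a request to the relevant deployed model.

```python
from typing import Callable, Dict

# Hypothetical extractor signature: raw document text in, extracted fields out.
Extractor = Callable[[str], Dict[str, str]]


def extract_invoice(text: str) -> Dict[str, str]:
    # Stand-in for a call to an invoice extraction model.
    return {"doc_type": "invoice", "length": str(len(text))}


def extract_contract(text: str) -> Dict[str, str]:
    # Stand-in for a call to a contract extraction model.
    return {"doc_type": "contract", "length": str(len(text))}


# Registry mapping a predicted document type to its specialised extractor.
EXTRACTORS: Dict[str, Extractor] = {
    "invoice": extract_invoice,
    "contract": extract_contract,
}


def classify(text: str) -> str:
    # Toy keyword classifier standing in for a custom classification model.
    lowered = text.lower()
    if "invoice" in lowered:
        return "invoice"
    if "agreement" in lowered or "contract" in lowered:
        return "contract"
    return "unknown"


def process(text: str) -> Dict[str, str]:
    """Single entry point: classify the document, then dispatch to its extractor."""
    doc_type = classify(text)
    extractor = EXTRACTORS.get(doc_type)
    if extractor is None:
        # Unrecognised documents go to manual handling rather than a wrong model.
        return {"doc_type": "unknown", "status": "manual_review"}
    return extractor(text)
```

Keeping the registry as data means a new document type is added by registering one more extractor, and callers of `process` never change.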

Version management happens at scale. Teams can deploy multiple versions of the same model simultaneously and route traffic gradually from v1 to v2 for A/B testing, with automatic fallback if the new version underperforms. All versioning is tracked with complete audit trails. Every deployed model includes comprehensive metadata: training data characteristics, performance metrics across document types, known limitations, responsible AI assessments for bias and fairness, and version history with change documentation.

This metadata powers model governance dashboards that show exactly which models are deployed where, how they're performing, and whether they meet compliance requirements.
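To make the gradual v1-to-v2 traffic shift and automatic fallback concrete, here is a minimal sketch in plain Python. The function names, the 10-point step, and the 0.01 accuracy tolerance are all assumptions chosen for illustration; they do not describe how Azure AI Foundry implements routing internally.

```python
import hashlib


def pick_version(document_id: str, canary_percent: int) -> str:
    """Deterministically assign a document to 'v1' or 'v2'.

    Hashing the document ID (rather than drawing a random number) means the
    same document always maps to the same version, which keeps results
    reproducible for audits.
    """
    digest = hashlib.sha256(document_id.encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100  # stable bucket in the range 0..99
    return "v2" if bucket < canary_percent else "v1"


def next_canary_percent(current_percent: int, v1_accuracy: float,
                        v2_accuracy: float, step: int = 10,
                        tolerance: float = 0.01) -> int:
    """Increase v2 traffic while it keeps up with v1; otherwise fall back to 0."""
    if v2_accuracy + tolerance < v1_accuracy:
        return 0  # v2 underperforms: send all traffic back to v1
    return min(100, current_percent + step)
```

A scheduler could call `next_canary_percent` after each evaluation window and feed the result into `pick_version`, so a regression in v2 drains its traffic without a redeployment.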

Why Versioning and Traceability Are Now Legal Requirements

The regulatory landscape has shifted from aspirational principles to enforceable requirements with significant penalties. The EU AI Act's technical documentation and record-keeping obligations for high-risk systems (Articles 11 and 12) set a demanding evidence standard. In practice, meeting it means that logs are machine-readable and timestamped, assessments are version-controlled, approval trails map to regulatory requirements, and updates are continuously tracked. Simply claiming "we have governance" is insufficient. Regulators expect ongoing proof of operational controls.

Consider a scenario where a document processing system makes an error that impacts a financial decision or legal proceeding. Regulators and auditors will demand answers: Which model version processed this document? What training data was used? Were there human review checkpoints? How was the model validated before deployment? What quality assurance processes detected or missed the error? Without comprehensive versioning and traceability, organisations cannot answer these questions, exposing them to regulatory action, legal liability, and reputational damage.

Azure AI Foundry provides built-in capabilities for regulatory compliance. Every API call, model invocation, and processing operation is logged with user identity, timestamp, geographic location, model version and configuration, and confidence scores. Model versions, once deployed, cannot be altered. Historical versions remain accessible for reproduction and investigation. Built-in compliance frameworks map Azure AI capabilities to specific regulatory requirements: EU AI Act high-risk system obligations, GDPR data processing, and industry-specific regulations.
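As an illustration of what a machine-readable, timestamped, tamper-evident audit entry might look like, the sketch below records the fields listed above in an immutable Python dataclass. The field names and the hash-chaining step are assumptions added for this example; they are not the platform's actual log schema.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)  # frozen: a record cannot be modified after creation
class AuditRecord:
    user_id: str
    timestamp: str        # ISO 8601, UTC
    region: str
    model_version: str
    confidence: float
    previous_hash: str    # links this record to the one before it


def record_hash(record: AuditRecord) -> str:
    # Serialise with sorted keys so the same record always yields the same hash.
    payload = json.dumps(asdict(record), sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_record(log: list, user_id: str, region: str,
                  model_version: str, confidence: float) -> AuditRecord:
    # The first record has no predecessor, so its previous_hash is empty.
    previous = record_hash(log[-1]) if log else ""
    record = AuditRecord(
        user_id=user_id,
        timestamp=datetime.now(timezone.utc).isoformat(),
        region=region,
        model_version=model_version,
        confidence=confidence,
        previous_hash=previous,
    )
    log.append(record)
    return record


def verify_chain(log: list) -> bool:
    """Return True if every record still points at the hash of its predecessor."""
    for earlier, later in zip(log, log[1:]):
        if later.previous_hash != record_hash(earlier):
            return False
    return True
```

Because each entry stores the hash of the previous one, replacing any record other than the most recent one causes `verify_chain` to fail, which is one simple way to show that logs have not been rewritten.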

Human oversight controls allow mandatory human review for low-confidence extractions, specific high-risk document types, processing of sensitive personal information, and decisions with significant financial or legal impact.
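The four review triggers above can be expressed as a small policy function. This is a sketch under stated assumptions: the threshold values, the set of high-risk document types, and the `Extraction` fields are hypothetical and would in practice be set by risk and compliance teams.

```python
from dataclasses import dataclass

# Hypothetical policy values chosen only for illustration.
CONFIDENCE_THRESHOLD = 0.85
HIGH_RISK_TYPES = {"claim", "loan_application"}
AMOUNT_THRESHOLD = 10_000.0


@dataclass
class Extraction:
    doc_type: str
    confidence: float
    contains_personal_data: bool
    amount: float


def review_reasons(item: Extraction) -> list:
    """Return every reason this extraction needs human review (empty = none)."""
    reasons = []
    if item.confidence < CONFIDENCE_THRESHOLD:
        reasons.append("low_confidence")
    if item.doc_type in HIGH_RISK_TYPES:
        reasons.append("high_risk_document_type")
    if item.contains_personal_data:
        reasons.append("sensitive_personal_data")
    if item.amount >= AMOUNT_THRESHOLD:
        reasons.append("significant_financial_impact")
    return reasons
```

Returning the list of reasons, rather than a single yes/no flag, gives reviewers and auditors a record of why each document was escalated.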

From Pilots to Production: Why Most Fail and How to Succeed

The intelligent document processing graveyard is littered with successful pilots that never scaled. 70% of organisations are piloting business process automation, but 90% intend to scale enterprise-wide in 2-3 years: a gap that reveals the "valley of death" between pilot and production.

Pilots often use quick-and-dirty approaches: manual data pipelines, hardcoded business logic, minimal error handling. These shortcuts accelerate proof-of-concept but create technical debt that makes production deployment prohibitively expensive. A pilot might process one invoice format from one vendor. Production means handling invoices from 500+ vendors across 30 countries in 15 languages, each with format variations. The "multiply by reality" challenge kills most scaling efforts.

Organisations leveraging Azure AI Foundry for production deployments report fundamentally different outcomes. The platform provides a unified development lifecycle from data preparation and labelling through model training, deployment, monitoring, and version control. Development practices from the pilot carry into production, so no architectural rewrites are required. Pre-built connectors to Azure Storage, SharePoint, Power Automate, Logic Apps, and Power BI enable enterprise integration out-of-the-box.

Processing scales automatically from hundreds to millions of documents monthly through serverless compute, parallel processing across global infrastructure, intelligent caching, and built-in cost controls. Real-time performance metrics, automated alerting for quality degradation, cost tracking, and security monitoring provide production-grade observability.
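Automated alerting for quality degradation can be as simple as watching a rolling average of extraction confidence. The sketch below shows one way to do that in plain Python; the `ConfidenceMonitor` class, window size, and alert level are assumptions for illustration rather than a description of the platform's built-in monitoring.

```python
from collections import deque


class ConfidenceMonitor:
    """Tracks a rolling mean of extraction confidence and flags degradation."""

    def __init__(self, window_size: int = 100, alert_below: float = 0.80):
        self.scores = deque(maxlen=window_size)  # oldest scores drop off automatically
        self.alert_below = alert_below

    def record(self, confidence: float) -> bool:
        """Add a score; return True if the rolling mean is below the alert level."""
        self.scores.append(confidence)
        window_full = len(self.scores) == self.scores.maxlen
        mean = sum(self.scores) / len(self.scores)
        # Only alert on a full window so a few early scores cannot trigger it.
        return window_full and mean < self.alert_below
```

A sustained drop in the rolling mean is often the first visible sign that a new document variation has appeared and the model needs fresh labelled examples.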

The Keyrus Advantage: From Technology to Business Outcomes

Technology platforms provide capabilities, but successful enterprise AI deployments require expertise in translating business requirements into technical architecture, navigating organisational change, and building systems that deliver sustainable value. This is where Keyrus transforms Azure AI Foundry use cases from theoretical possibilities into operational reality.

We begin every engagement by understanding what business processes are constrained by manual document handling, what decisions depend on information trapped in unstructured documents, where errors or delays create risk, and what measurements define success from the business perspective. Our architects then design Azure AI solutions that directly address these outcomes with clear KPIs and measurable ROI from day one.

Even pilot implementations use production-grade patterns: proper error handling, comprehensive logging, version control, and automated testing. This eliminates technical debt and enables rapid scaling. Rather than waiting for "complete" automation, we deploy in phases, first the highest-value document types, then expanding coverage. Each phase delivers measurable business value while building momentum for broader adoption.

Regulatory compliance isn't an afterthought; it's a design constraint from the beginning. We help organisations understand exactly which AI regulations apply to their use cases, what evidence regulators will demand, and how to structure Azure AI implementations for compliance. Our solutions implement comprehensive governance spanning model development and validation procedures, deployment approval processes, continuous monitoring and drift detection, incident response protocols, and audit documentation.

Real-World Impact: Azure AI Foundry in Action

A global insurance company was manually processing 2.4 million claims documents annually across 15 document types, with an 8-day average processing time and a 12% error rate. An Azure AI Foundry implementation with custom classification routing, GPT-powered auto-labelling for proprietary forms, human review workflows for high-value claims, and full audit trails delivered dramatic results. Processing time fell from 8 days to 18 hours, a reduction of roughly 91%. The error rate decreased from 12% to 1.8%. The company achieved $4.2M in annual cost savings through labour efficiency, with 100% audit trail compliance and ROI in seven months.

A multinational bank struggled with customer onboarding delayed by manual review of identity documents, financial statements, and regulatory forms across 40 countries in 25 languages. An Azure AI Foundry deployment with multi-language OCR, 200+ specialised models unified through a single endpoint, and geographic routing for data residency compliance transformed their operations. Onboarding time fell from 5 days to 4 hours, a reduction of roughly 97%. Compliance documentation costs fell 60%, customer satisfaction scores improved 34%, and the bank achieved full GDPR and regional banking regulation compliance.

The 2026 Imperative: Build Infrastructure, Not Point Solutions

In 2026, the convergence of explosive data growth, regulatory enforcement, and AI adoption creates an unavoidable imperative: organisations must build governed, scalable document AI infrastructure rather than just deploying point solutions. 85% of organisations will increase data storage spending in 2026 despite cost pressures. 62% cite AI data management as their top skills gap, up from 43% in 2024.

Organisations that treat document AI as an IT project will struggle. Those that recognise it as foundational infrastructure, as critical as ERP or CRM, and invest accordingly will thrive. Azure AI Foundry provides the platform for this transformation, combining industry-leading extraction accuracy, rapid deployment via GPT-powered auto-labelling, enterprise scalability through unified model orchestration, regulatory compliance via comprehensive versioning and audit trails, and cost efficiency through intelligent resource optimisation.

The organisations winning in 2026 and beyond won't be those with the most documented AI pilots. They will be those with production systems processing millions of documents monthly, continuously learning and improving, fully auditable and compliant, and delivering measurable business outcomes. The infrastructure for this future is available today.

Explore How Keyrus Helps Enterprises Deploy Governed AI on Azure

Ready to transform unstructured document chaos into enterprise intelligence? Keyrus combines deep Azure AI expertise with proven implementation methodologies to deliver production deployments in weeks rather than months, an architecture designed for millions of documents monthly, built-in governance meeting EU AI Act requirements, measurable ROI with typical 6–12-month payback periods, and future-proof infrastructure that evolves with your needs.

Contact Keyrus today to schedule an Azure AI Foundry strategy consultation. Let's build intelligent document processing that delivers business value while meeting the highest governance standards.

About Keyrus: Keyrus is a global consulting firm specialising in data intelligence and digital transformation. With deep expertise in Microsoft Azure and AI technologies, we help enterprises harness cloud-native AI platforms to drive business value while maintaining operational excellence and regulatory compliance.