Since its introduction in the 1970s, SQL has been the overwhelmingly dominant language for processing information in relational databases, and the ability to use it is ubiquitous across practically all data practitioners. Today, with the dawn of Large Language Models, could natural language take over as our preferred means of querying data?

This is surely the holy grail of analytics.

No longer would we require data analysts to translate business stakeholder questions into SQL queries. Instead, questions are directed to the LLM which in turn, using Generative AI and a knowledge of our database schema, composes the necessary query to retrieve the required information. Can dashboards and reports finally be replaced by a simple Text-to-SQL chatbot?

In this blog, we'll uncover how Language Models like GPT-4o coupled with LangChain can transform the way we interact with databases and provide a glimpse into the future of business intelligence (BI) and Analytics.

Existing Analytics Tools

Before we begin, we should note that this idea is not new. In fact, it is the fundamental concept behind the AI-powered analytics company ThoughtSpot, who have been working on natural language queries of data since before the LLM boom.

It is also a concept that is now embedded in many user-facing visualisation platforms, such as Insight Advisor in QlikSense, Tableau Pulse, Alteryx's AiDIN, Microsoft Copilot for Power BI and Snowflake's Copilot.

These are all fantastic tools that are certain to grow in maturity in time, however most exist as extensions to BI tools, with ready made datasets. In contrast, this blog focusses on directly querying a relational database with Python - and the most popular library for achieving this is undoubtedly LangChain.

Python and LangChain

LangChain is an open-source library in both Python and Javascript. It is a general purpose tool for interacting programmatically with Large Language Models - either publicly available ones such as those of OpenAI, or your own.

In their own words, LangChain "helps connect LLMs to your company’s private sources of data and APIs to create context-aware, reasoning applications".

In our example, we'll be using LangChain to connect to a very simple SQLite database, and use OpenAI GPT models to query it. The database is Microsoft's well-known Northwind database, which contains fictitious sales data.

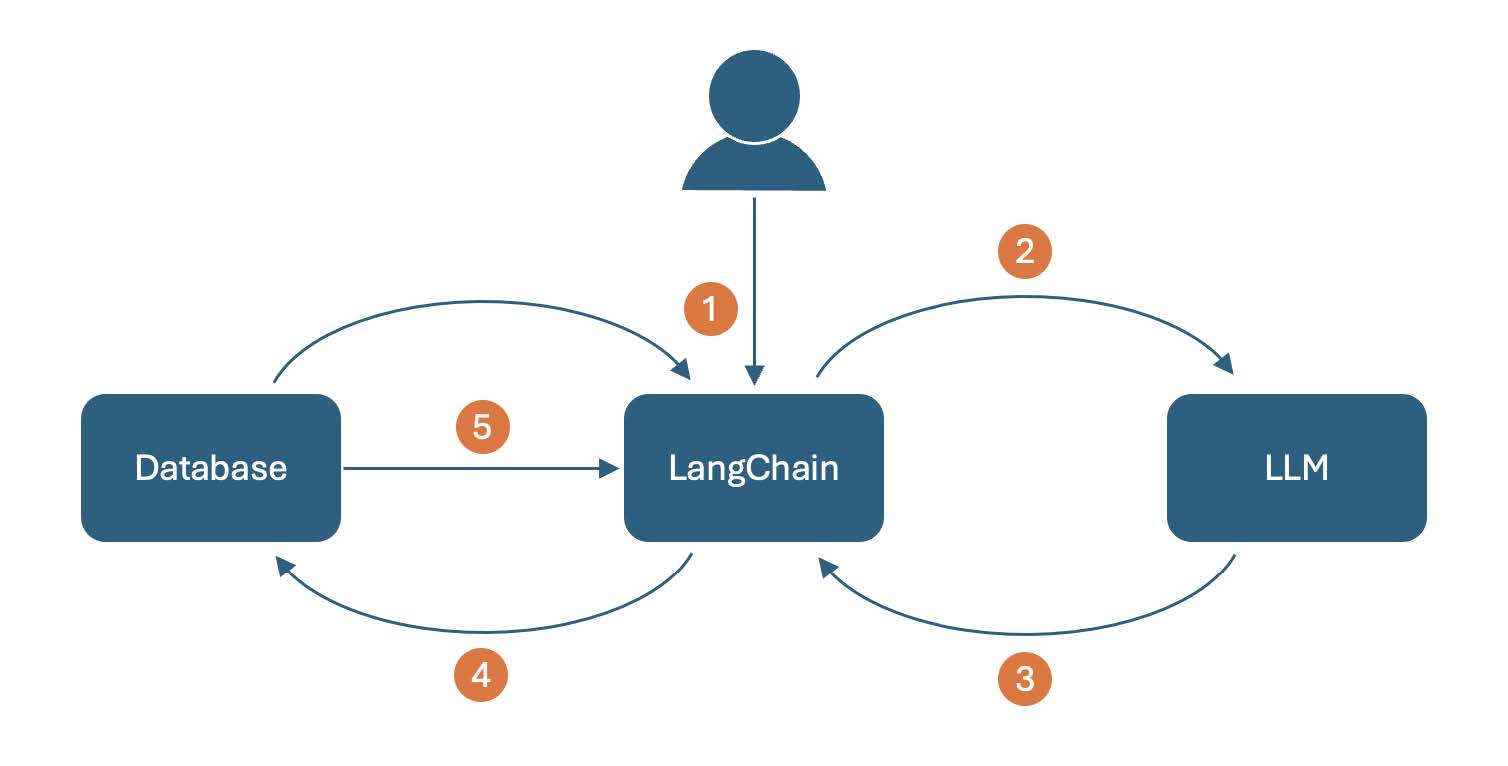

The figure above summarise the process in five steps:

1. LangChain collects the User's question and the database schema information (table and column names) 2. The information is fed to a LLM, along with a written command to create a SQL query 3. The LLM generates a SQL query and responds 4. LangChain connects to the database and executes the query 5. The database returns a result

Getting Started

Getting started is very easy thanks to LangChain's "SQL Agent" function. Simply point it to a database and specify your LLM of choice and start asking it questions. We'll step through the code to do this below. 1. Import three key functions from the Langchain library:

from langchain_community.utilities.sql_database import SQLDatabase from langchain_community.agent_toolkits import create_sql_agent from langchain_openai import ChatOpenAI

2. Load the database using the Langchain SQLDatabase function

db = SQLDatabase.from_uri("sqlite:///northwind.db")

3. Add your OpenAI API Key to environment variables. This can be found in your account settings at openai.com

%env OPENAI_API_KEY=<your_key_here>

4. Specify your LLM using the ChatOpenAI method

llm_35t = ChatOpenAI(model="gpt-3.5-turbo", temperature=0) llm_4o = ChatOpenAI(model="gpt-4o", temperature=0)

5. Create your SQL Agent

agent_executor_35t = create_sql_agent(llm_35t, db=db, agent_type="openai-tools", verbose=True) agent_executor_4o = create_sql_agent(llm_4o, db=db, agent_type="openai-tools", verbose=True)

6. Ask a question

agent_executor_35t.invoke("Which country's customers spent the most?")

It's as simple as that. In our case we have implemented two agents, one using GPT3.5 Turbo and a second using the more recent GPT 4o. In the next section, we'll take a look at the answer given for the question above and throw some tougher questions in the mix.

Agent Responses

Q1. Which country's customers spent the most?

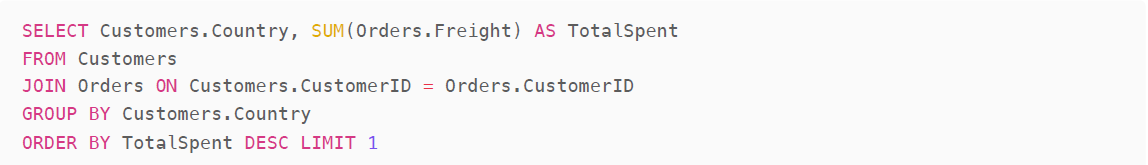

GPT 3.5 Turbo Agent Response:

"Customers from the USA spent the most, with a total amount of $566,798.25."

Agent SQL Query:

GPT 4o

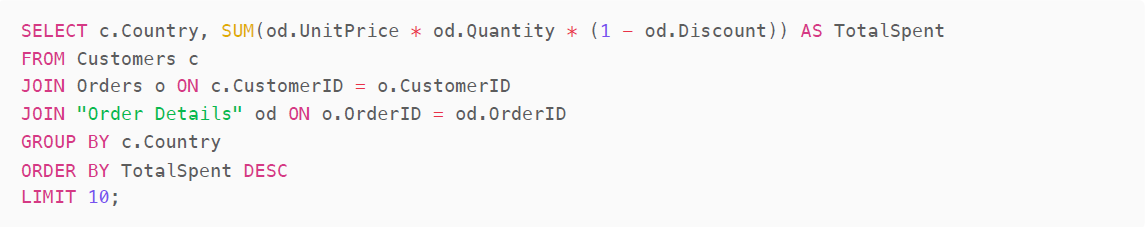

Agent Response:

"The country whose customers spent the most is the USA, with a total spending of $62,601,564.84"

Agent SQL Query:

Already we see a big difference between the response given by GPT 3.5 Turbo and the newer GPT 4o. The former picks out the Freight cost column only and ignores UnitPrice, but does identify the Customers and Orders tables and formats a valid query complete with JOIN, GROUP BY and ORDER BY clauses.

Using GPT 4o, the Order Details table is also included and the LLM correctly defines a TotalSpent value by taking into account the unit price, quantity and even discount applied. It also makes use of table aliases for improved readability (which 3.5 Turbo did not do). The Top 10 countries are returned by the query and included in the answer. This wasn't requested but is arguably useful information.

In summary, GPT 4o provides an answer similar to what one would expect from a data analyst. A more thorough answer might have also included the Freight cost that GPT 3.5t calculated, however it isn't clear if this is a cost incurred by the customer. This is a simple example that shows how important it is to ask a clear unambiguous question when using these Text-to-SQL generators.

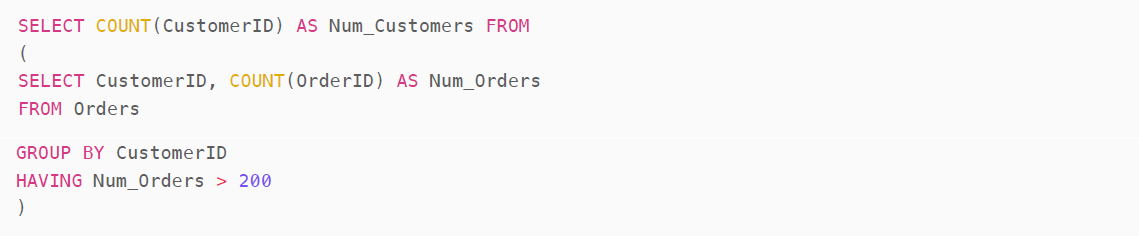

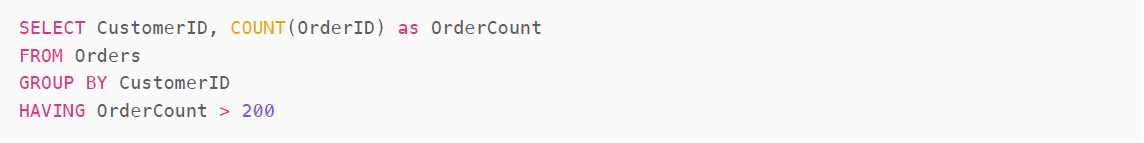

Q2. How many customers have made more than 200 orders?

GPT 3.5 Turbo

Agent Response:

"There are 4 customers who made more than 200 orders."

Agent SQL Query:

GPT 4o Agent Response:

"There are 4 customers who made more than 200 orders. They are...."

Agent SQL Query:

Both GPTs give the correct response to this question, however with 4o we are once again provided by additional information - in this case the names of the 4 customers. The queries used reflect this difference, the query using 3.5T returns a single number, four, whereas the query using 4o returns the four rows and it correctly provides the answer based on the row count of the query result.

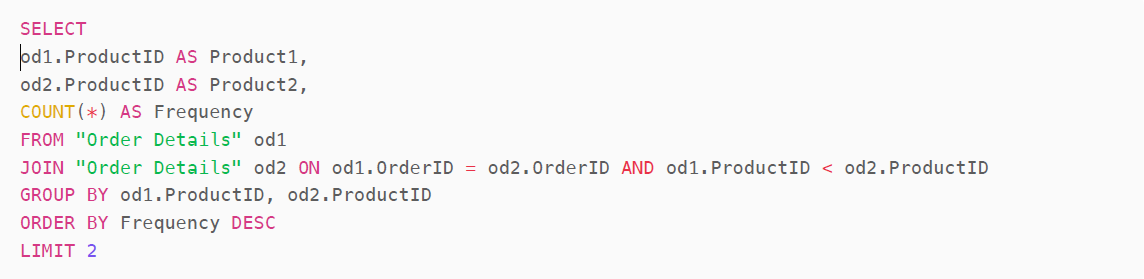

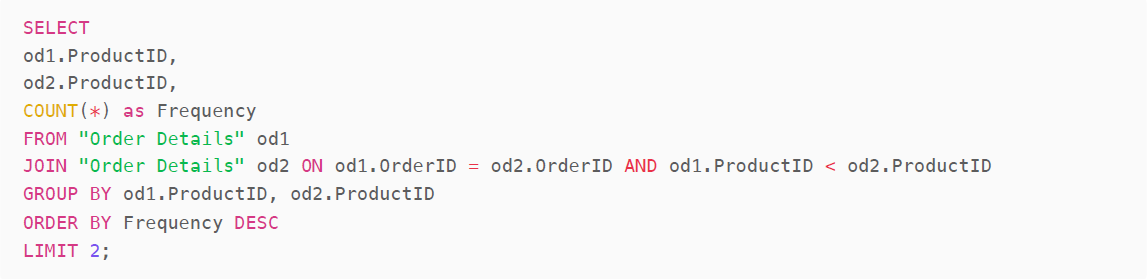

Q3. Which two products are most frequently bought together?

This question gets a little bit deeper and requires multiple joins of the same table, Order Details.

GPT 3.5 Turbo

Agent Response:

"The two products that are most frequently bought together are ProductID 20 and ProductID 66, with a frequency of 5445 times. The next most frequently bought together are ProductID 22 and ProductID 66, with a frequency of 5442 times."

Agent SQL Query:

GPT 4o

Agent Response:

"The two products that are most frequently bought together have the following ProductIDs:

1. ProductID 66 and ProductID 5445 2. ProductID 66 and ProductID 5442

To get the names of these products, I can query the Products table."

followed by "The two products that are most frequently bought together are:

1. Louisiana Hot Spiced Okra (ProductID 66) and ProductID 5445 2. Louisiana Hot Spiced Okra (ProductID 66) and ProductID 5442

It seems that ProductIDs 5445 and 5442 do not exist in the Products table. Therefore, I can only provide the name for ProductID 66".

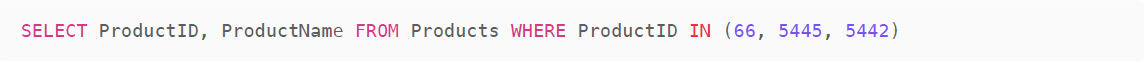

Agent SQL Query:

followed by

In this example 3.5T provides the correct answer but 4o makes a mistake, despite them both generating the same initial query. The latter confuses the frequency column as a Product ID and tries (and fails) to find the associated product name. 3.5T doesn't go the extra step of finding the Product Name for us, another example of how it provides a more straightforward answer.

Q4. What is the monthly average customer count across all months??

This question mimics a typical business KPI.

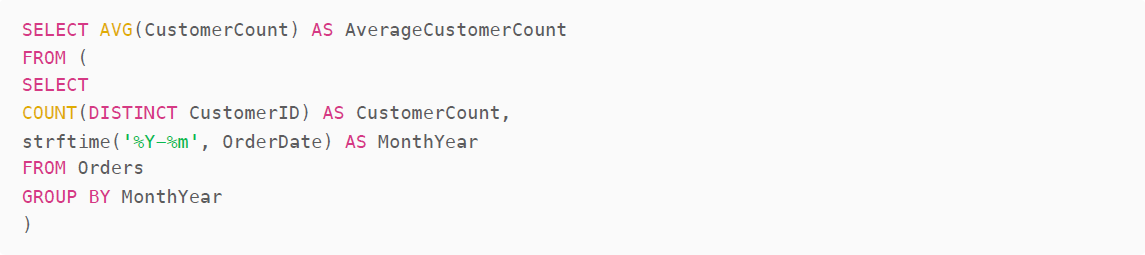

GPT 3.5 Turbo

Agent Response: "The monthly average customer count across all months is approximately 67.04 customers."

Agent SQL Query:

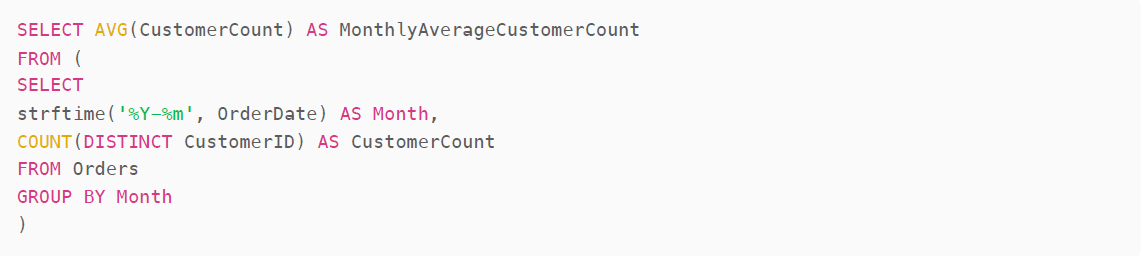

GPT 4o

Agent Response:

"The monthly average customer count across all months is approximately 67"

Agent SQL Query:

Both models use the same query to get the correct answer to the question. Again, we see that 4o generates an answer that better fits the context - as we are unlikely to be concerned by the decimal places when discussing a discrete quantity like Customers. However, some would argue that 3.5T's response is preferable as it is accurate and doesn't attempt to simplify the answer for us.

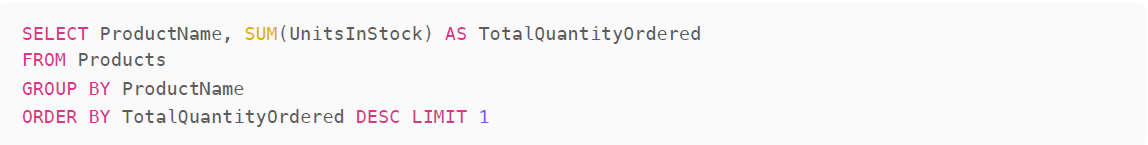

Q5. What is the top product by quantity ordered?

In this question, we are testing the model's ability to identify the appropriate column for summarisation.

GPT 3.5 Turbo

Agent Response:

"The top product by quantity ordered is "Rhönbräu Klosterbier" with a total quantity ordered of 125.."

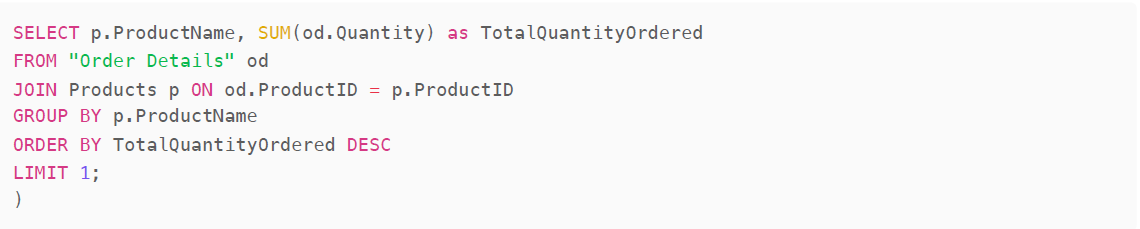

Agent SQL Query:

GPT 4o Agent Response:

"The top product by quantity ordered is "Louisiana Hot Spiced Okra" with a total quantity of 206,213 units ordered."

Agent SQL Query:

Whilst 4o gets this answer correct, 3.5T gets it all wrong - selecting the wrong column to summarise just as we saw back in question 1.

Q6. What is the top product by quantity ordered? and who is the top customer for the product?

This question extends the previous one to see if the model's can take the answer from one query and use it to answer a follow up.

GPT 3.5 Turbo

Agent Response:

The top product by quantity ordered is "Louisiana Hot Spiced Okra" with a total quantity ordered of 206,213. The top customer for this product is customer "B's Beverages" (CustomerID: BSBEV) with a total quantity ordered of 2,862.

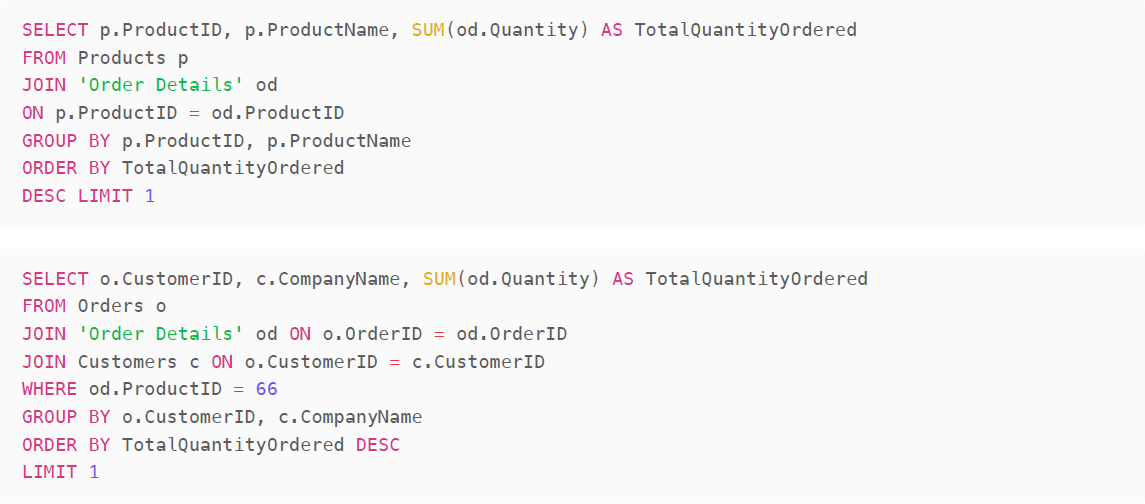

Agent SQL Query:

GPT 4o

Agent Response: The top product by quantity ordered is Schoggi Schokolade with a total quantity of 3310 units. The top customer for this product is B's Beverages (CustomerID: BSBEV).

Agent SQL Query:

Bizarrely, despite incorrectly answering question 6a, 3,5T this time generates the correct query to identify product 66 and then also correctly finds the top customer.

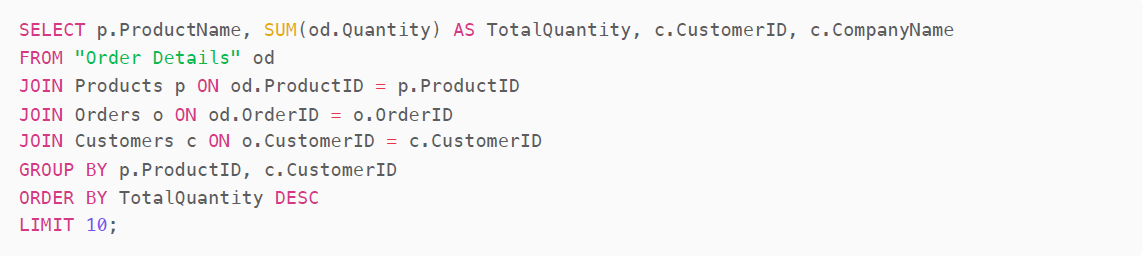

In contrast, the query generated by 4o is not what we're looking for. It returns the top Product and Customer combination, rather than the top Customer of the top Product overall. This answer is likely to be very dependent on the exact wording of the question.

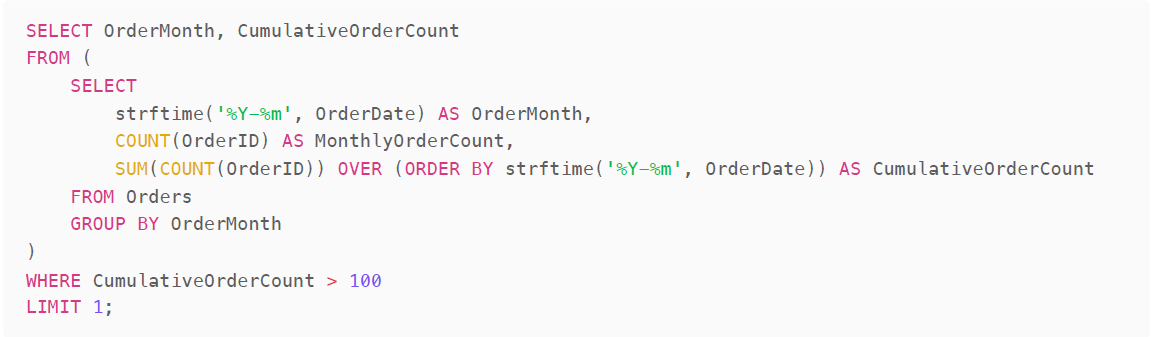

Q7. In which month did the cumulative order count surpass 100?

The aim of this question is to test the model's ability to generate queries with window functions

GPT 3.5 Turbo

Agent Response:

The cumulative order count surpassed 100 in multiple months. Here are the months where the cumulative freight surpassed 100...

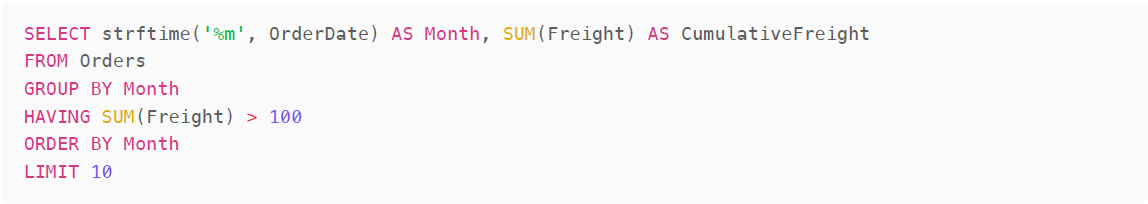

Agent SQL Query:

GPT 4o

Agent Response:

The cumulative order count surpassed 100 in August 2012

Agent SQL Query:

Again, 3.5T uses the incorrect column to summarise and appears to have ignored the 'cumulative' part of the question. 4o produces the required query using window function to identify the correct month.

Conclusion

Langchain is a powerful library that combines the power of LLMs with your database to produce impressive Text-2-SQL capability. Some key findings from this investigation were:

Model choice is key.

The two models we tested produced vastly different results. Whilst the newer GPT4o was more often the better Agent, we did find examples where its predecessor was preferable. The selection of model to use with Text-2-SQL tools is crucial and multiple models should be tested prior to rolling out such features in an enterprise environment. Text-2-SQL tools that do not allow for different models to be swapped in/out would not be advisable.

Detail is key

Avoid ambiguity in your questions. This means including as much detail about the scope of your questions as possible. For example, note the 'across all months' inclusion in question 4. Exclude this from the question and the models will fail to answer correctly. Unlike a human analyst, these models do not respond asking for clarification. Future advancements of these technologies will surely address this and allow for a fuller back-and-forth conversation between the user and the agent, but for now providing detail upfront is crucial.

Phrasing is key

The same question, phrased in slightly different way can lead to a different result regardless of the model used. When implementing Text-2-SQL tools work should be done to test a variety of different phrasings of the same question to ensure consistency in the model's answers. Doing this will allow 'phrasing guidelines' to be shared with users to ensure that their questions are being interpreted in the way they want them to be. This is no different to working with a human analysts.

An LLM-powered Analyst Agent is certainly a possibility for forward-thinking organisations. It's just one of many applications of Generative AI within the data and analytics space. (We've outlined others in this article).

Whilst we have focussed on open-source tools in this article, the principles and learnings will likely apply to any implementation of Text-2-SQL within data and analytics platforms. There are huge efficiency gains to be made from using this functionality - it can allow business users to interact directly with data and can super-charge your analysts to work more effectively. However, a successful implementation will need to be rigorously tested and training for users will be essential.

For more information or support with your AI journey no matter what tools you use, don't hesitate to get in touch with us