In the world of artificial intelligence, an unexpected revolution is underway. DeepSeek, a modest Chinese startup, has shaken up established giants such as OpenAI with its open-source R1 model. The story recalls the biblical tale of David and Goliath, in which the underdog triumphs against all odds.

Origins and Development: Opposing Trajectories

ChatGPT, OpenAI’s flagship product, is the result of massive investment and extensive research. Trained on vast datasets with enormous computing resources, it showcases what modern computing power can achieve.

In contrast, DeepSeek, a Chinese startup founded in 2023 by entrepreneur Liang Wenfeng, has taken a more resource-efficient approach. On January 20, 2025, it unveiled its R1 model, which rivals OpenAI’s models in reasoning capabilities at a significantly lower cost, giving developers a powerful and cheaper alternative.

Performance: A Fierce Struggle for Supremacy

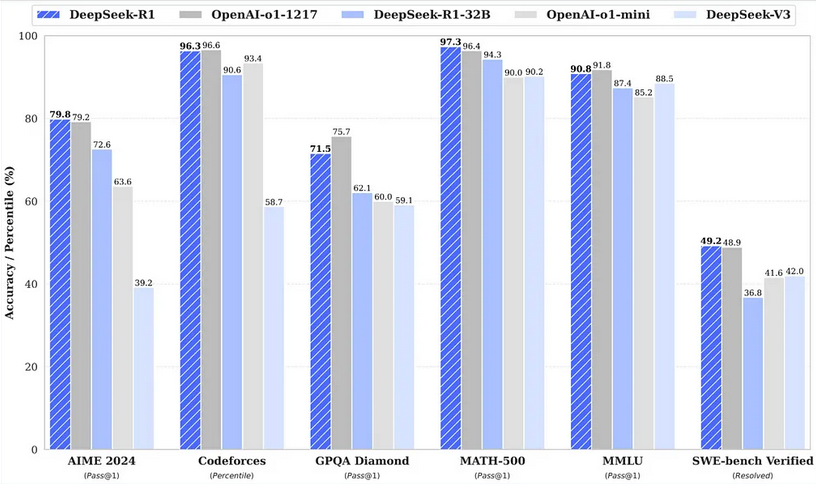

DeepSeek-R1 performs on par with OpenAI’s best reasoning model across a range of benchmarks and edges ahead on several of them. According to the results DeepSeek published with its R1 technical report, on AIME 2024, a test of advanced mathematical reasoning, DeepSeek-R1 scored 79.8%, just ahead of OpenAI-o1-1217 (79.2%) and well ahead of OpenAI-o1-mini (63.6%). On Codeforces, which assesses competitive programming skills, DeepSeek-R1 reaches the 96.3rd percentile, essentially tied with OpenAI-o1-1217 (96.6) and above OpenAI-o1-mini (93.4).

On MATH-500, a benchmark of complex mathematical problems, DeepSeek-R1 leads with 97.3%, compared with 96.4% for OpenAI-o1-1217. In general knowledge and language understanding (MMLU), DeepSeek-R1 scores 90.8%, slightly behind OpenAI-o1-1217 (91.8%) but ahead of OpenAI-o1-mini (85.2%).

On SWE-bench Verified, which tests a model's ability to resolve real-world GitHub issues, DeepSeek-R1 scores 49.2%, narrowly ahead of OpenAI-o1-1217 (48.9%).

These results show that DeepSeek's models are competitive with the best in complex reasoning and programming, placing the Chinese startup on par with the industry's giants.

Efficiency vs. Cost: An Economic Revolution

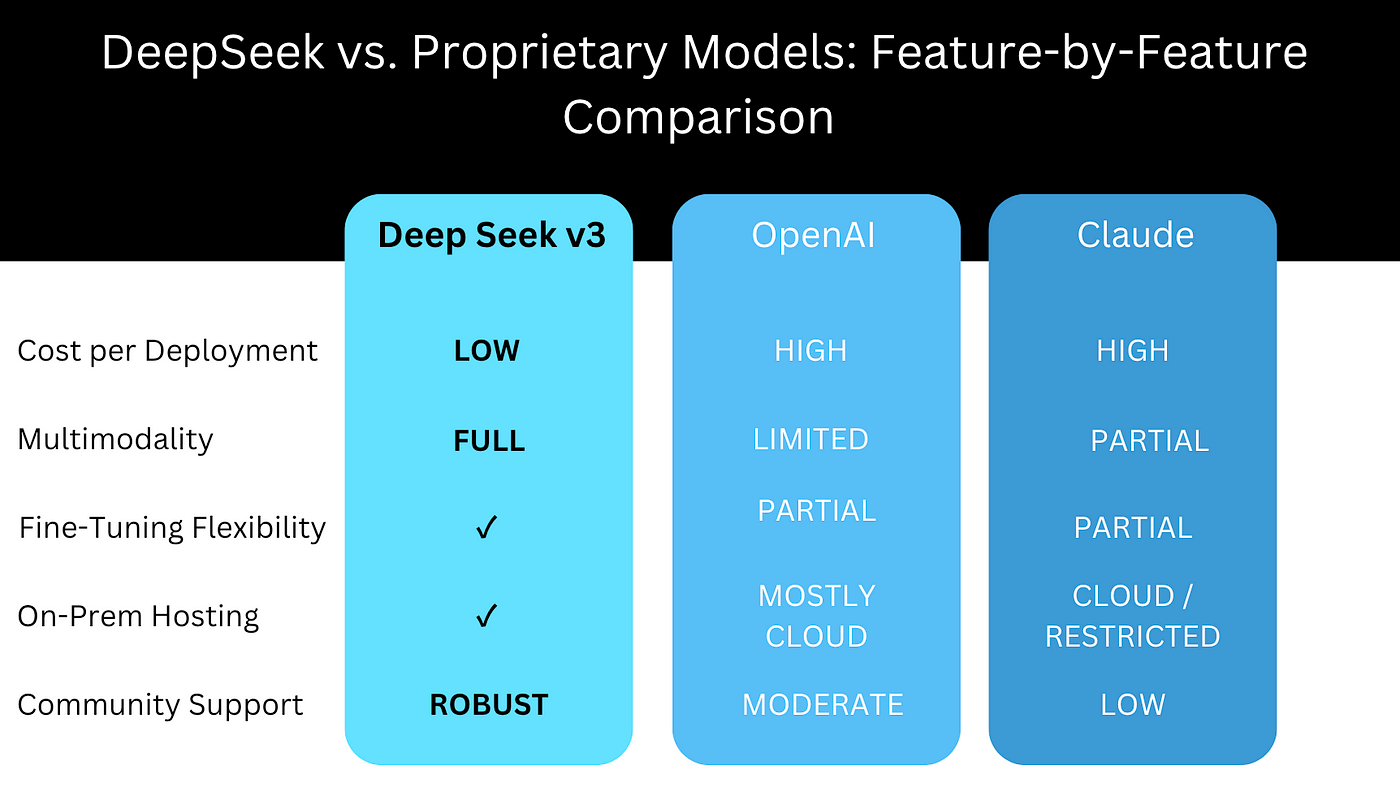

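While models like GPT-4o require massive investments, DeepSeek is disrupting the industry with significantly lower training and operating costs. The most widely cited figure, about $5.6 million, is DeepSeek’s own estimate of the compute cost of the final training run of DeepSeek-V3, the base model on which R1 is built; it excludes earlier research, experiments, and hardware purchases. By comparison, OpenAI CEO Sam Altman has said that training GPT-4 cost more than $100 million. DeepSeek’s efficiency rests on techniques such as a mixture-of-experts architecture, which activates only a fraction of the model’s parameters for each token, and low-precision (FP8) training.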
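The widely quoted $5.6 million is straightforward arithmetic. The DeepSeek-V3 technical report counts about 2.788 million H800 GPU hours for the model's final training run (pre-training, context extension, and post-training) and assumes a rental price of $2 per GPU hour. The Python sketch below reproduces that calculation under those reported assumptions; the `training_cost` helper is illustrative, and the result covers only compute rental, not research, experiments, staff, or hardware purchases.

```python
# Sketch of the headline training-cost arithmetic. GPU-hour figures are those
# reported for DeepSeek-V3's final training run; the $2/GPU-hour rental price
# is the report's own assumption. Research, ablations, staff, and hardware
# purchases are NOT included.

GPU_HOURS = {
    "pre-training": 2_664_000,
    "context extension": 119_000,
    "post-training": 5_000,
}
PRICE_PER_GPU_HOUR = 2.00  # USD, assumed H800 rental price


def training_cost(gpu_hours: dict[str, int], price: float) -> float:
    """Return the total cost in USD: total GPU hours times the hourly price."""
    return sum(gpu_hours.values()) * price


total_hours = sum(GPU_HOURS.values())
cost = training_cost(GPU_HOURS, PRICE_PER_GPU_HOUR)
print(f"{total_hours:,} GPU hours -> ${cost / 1e6:.3f} million")
```

Changing `PRICE_PER_GPU_HOUR` shows how sensitive the headline figure is to the assumed rental rate, one reason cross-company training-cost comparisons should be read with caution.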

In terms of operating cost, DeepSeek is just as striking. Through its API, DeepSeek-R1 costs $0.55 per million input tokens and $2.19 per million output tokens, compared with $2.50 and $10.00 for GPT-4o, and $15.00 and $60.00 for OpenAI-o1. Usage costs this low could considerably broaden access to artificial intelligence.

DeepSeek V3, the company's general-purpose (non-reasoning) model, is cheaper still: at its promotional launch prices of $0.14 per million input tokens and $0.28 per million output tokens, it undercuts GPT-4o mini ($0.15 and $0.60) while remaining competitive in performance.
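To make these per-token prices concrete, the sketch below estimates the monthly API bill for a hypothetical workload of 10 million input tokens and 2 million output tokens, using the prices quoted in this section (USD per million tokens). The workload, the `PRICES` table, and the `monthly_cost` helper are illustrative assumptions; list prices change, so check each provider's current pricing before relying on the numbers.

```python
# Hedged sketch: monthly API spend for a hypothetical workload, using the
# list prices quoted in this article (USD per million tokens).
# Prices change often; verify them on each provider's pricing page.

PRICES = {  # model: (input $/1M tokens, output $/1M tokens)
    "DeepSeek-R1": (0.55, 2.19),
    "OpenAI o1": (15.00, 60.00),
    "GPT-4o": (2.50, 10.00),
    "DeepSeek-V3": (0.14, 0.28),
    "GPT-4o mini": (0.15, 0.60),
}


def monthly_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Cost in USD: (tokens / 1M) times the per-million price, input plus output."""
    price_in, price_out = PRICES[model]
    return input_tokens / 1_000_000 * price_in + output_tokens / 1_000_000 * price_out


# Hypothetical workload: 10M input tokens and 2M output tokens per month.
IN_TOKENS, OUT_TOKENS = 10_000_000, 2_000_000

for name in PRICES:
    print(f"{name:<12} ${monthly_cost(name, IN_TOKENS, OUT_TOKENS):>8.2f}")
```

For this workload the script gives about $9.88 for DeepSeek-R1 against $270.00 for OpenAI-o1, roughly 27 times less. Reasoning models such as R1 and o1 bill their chain of thought as output tokens, so real output volumes, and therefore costs, can be considerably higher than the visible answers suggest.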

Transparency and Accessibility: Two Opposing Philosophies

DeepSeek has adopted an open approach, releasing the weights of its R1 model under the permissive MIT license so that anyone can download, run, and modify it, although the training data and full training pipeline were not published. This allows researchers, developers, and companies to customize and adapt the model to their specific needs, paving the way for new applications in fields as diverse as medicine, education, and finance.

A prominent example of this philosophy is the family of distilled models DeepSeek released alongside R1, such as DeepSeek-R1-Distill-Qwen-7B: smaller open models, based on Qwen and Llama, that were fine-tuned on R1's reasoning outputs. According to DeepSeek, the 32B version outperforms OpenAI-o1-mini on several benchmarks, and because the weights are open, the community can keep building on them. This approach also encourages local and regional initiatives, allowing developing countries to access advanced AI without relying on the costly infrastructure of tech giants.

In contrast, OpenAI’s ChatGPT is offered through free and paid subscription tiers and a paid API, providing a controlled user experience but limiting outside experimentation. Because the model weights are not public, users cannot inspect or modify the models and remain dependent on the company’s business decisions. This proprietary approach limits access and collaborative innovation, an area where DeepSeek excels.

DeepSeek’s openness is all the more significant because it is part of a broader trend toward opening up cutting-edge technologies. It lets users take control of AI and avoid the lock-in imposed by closed models, helping to reduce inequalities in access to innovation.

Ethical Considerations: The Question of Censorship

DeepSeek’s R1 model has been criticized for strictly censoring topics considered sensitive in China, such as the Tiananmen Square protests or the private lives of Chinese leaders. This limitation is often seen as the price of operating in a restrictive regulatory environment while enjoying the support of the Chinese government. Its defenders, however, point to R1’s exceptional technical quality, particularly in productivity, reasoning, and complex problem-solving.

By comparison, ChatGPT also moderates content, but its moderation is designed to allow more open discussion, including of global and sensitive topics. This moderation has its critics too: some users argue that OpenAI’s moderation algorithms introduce biases reflecting the company’s own cultural outlook or corporate values.

The ethical dimension of these models raises a central question: to what extent should AI systems reflect local or universal values? DeepSeek offers remarkable efficiency for practical applications, but its international adoption could be hampered by concerns about its content restrictions. OpenAI, for its part, faces the challenge of balancing moderation, freedom of expression, and social responsibility.

This dichotomy highlights the complex ethical issues that AI players must navigate, reflecting the tensions between technological innovation, regulatory control, and user expectations in an increasingly interconnected world.

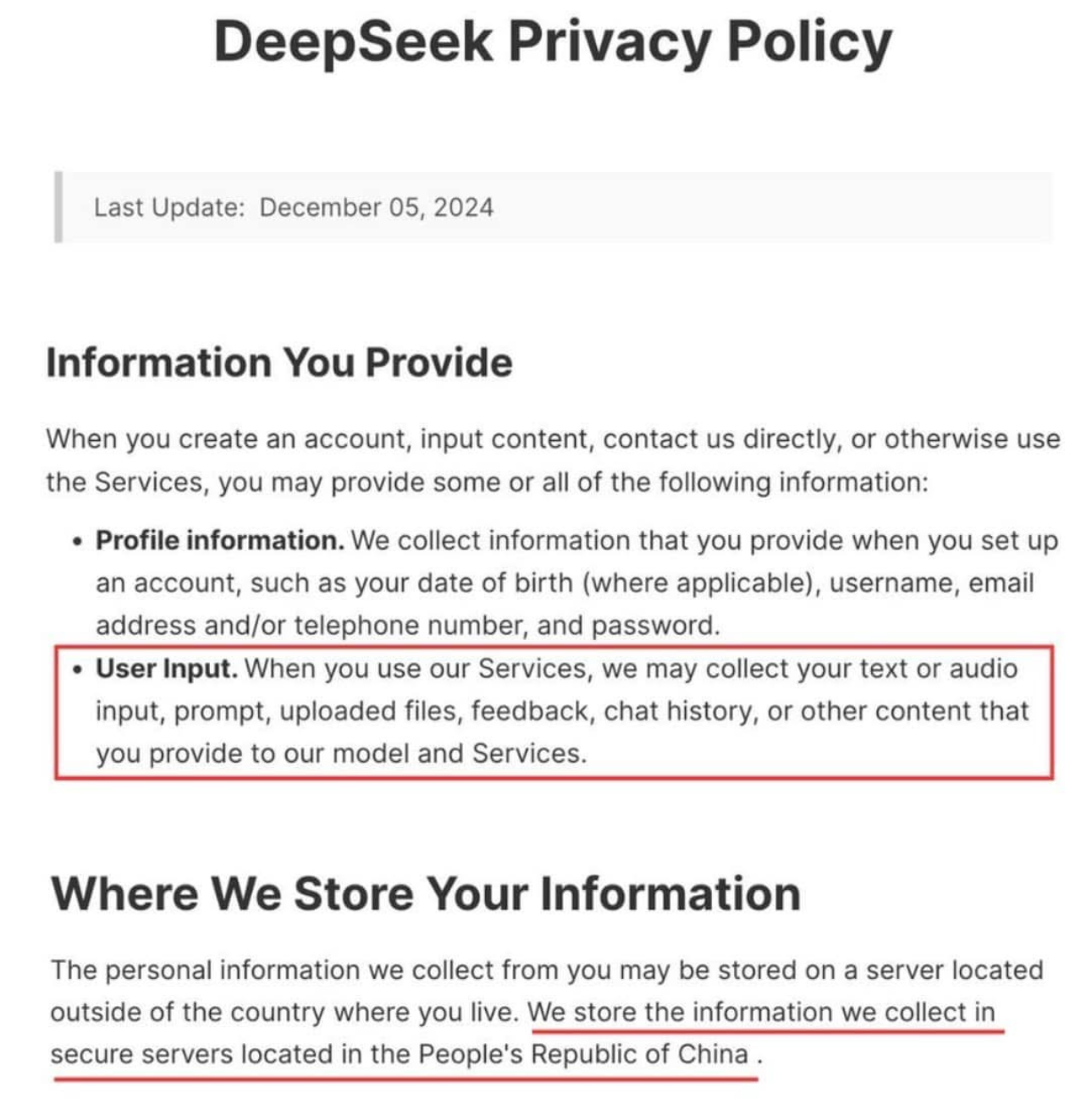

Pay Attention to DeepSeek's Privacy Policy!

DeepSeek's privacy policy raises concerns, particularly regarding the collection of personal data (profile information, chat history) stored on servers in China and therefore subject to Chinese law. Unlike ChatGPT, which offers options such as Temporary Chat and the ability to opt out of model training, DeepSeek is less transparent about how long data is retained and how it is used, which may hamper its adoption, particularly in Europe.

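However, because R1's weights are open, organizations can deploy the model locally or on their own servers, keeping full control over their data, reducing risk, and making compliance with regulations such as the GDPR easier. Running the full 671-billion-parameter model requires substantial hardware, but the smaller distilled versions run on far more modest machines. This flexibility makes DeepSeek an attractive alternative for privacy-conscious users.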
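How realistic is local deployment? A common rule of thumb is that a model's weights alone need roughly (number of parameters) × (bytes per parameter) of memory. The sketch below applies it to the full DeepSeek-R1, which has 671 billion parameters, and to a distilled model rounded to 7 billion parameters for illustration. The precision table and the `weight_memory_gb` helper are assumptions for this example, and the estimate ignores the KV cache, activations, and runtime overhead, so actual requirements are higher.

```python
# Back-of-the-envelope memory estimate for holding model weights locally.
# Weights only: ignores KV cache, activations, and framework overhead.

BYTES_PER_PARAM = {"fp16": 2.0, "fp8/int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Approximate weight memory in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


MODELS = {
    "DeepSeek-R1 (full)": 671e9,  # total parameter count
    "R1 distill, ~7B": 7e9,       # rounded, illustrative
}

for model, params in MODELS.items():
    for precision in BYTES_PER_PARAM:
        gb = weight_memory_gb(params, precision)
        print(f"{model:<20} {precision:<9} ~{gb:,.0f} GB")
```

Even at 4-bit precision the full model needs roughly 335 GB just for its weights, which puts it in multi-GPU server territory, whereas a quantized 7-billion-parameter distillation fits on a single consumer GPU or a well-equipped laptop.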

Market Impact: A Technological Earthquake

The emergence of DeepSeek sent shockwaves through the AI market, challenging established giants and causing significant financial repercussions. On January 27, 2025, for example, Nvidia's shares fell about 17%, erasing nearly $600 billion in market value, as investors feared that efficient models like R1 would reduce demand for expensive GPUs.

DeepSeek's free consumer chatbot app also reached number 1 among free apps on the US App Store, competing directly with ChatGPT. Its open, low-cost strategy creates opportunities for SMEs and emerging countries, while forcing giants like OpenAI and Google to rethink their strategies.

Comparison Chart: DeepSeek vs. ChatGPT

| Characteristic | DeepSeek (R1) | ChatGPT |
|---|---|---|
| Origin | Innovative Chinese startup | OpenAI's flagship product |
| Development cost | About $5.6 million (final training run of the DeepSeek-V3 base model) | Reportedly more than $100 million (GPT-4) |
| Approach | Open weights (MIT license) | Free and paid subscriptions, proprietary models |
| Performance | Complex reasoning and problem-solving at lower cost | Proficiency in a wide variety of tasks, from writing to programming |
| Ethical considerations | Criticized for censoring sensitive topics | Content moderation built into the model and service |
| Market impact | Sent shockwaves through the market; drives AI innovation and accessibility | Maintains a strong presence while facing increased competition |

Conclusion: A New Era of Artificial Intelligence

The duel between DeepSeek and ChatGPT symbolizes a transformative era for AI. DeepSeek embodies bold innovation and cost efficiency, while ChatGPT represents established power and reliability. With models like R1, AI may be entering an era of abundance, with technological advances accessible to all. It remains to be seen how the giants will respond to this major disruption.

If you have questions about AI or want to learn more, contact us!

Sources used in the article: