As any business intelligence consultant could tell you, user adoption can be the most difficult part of any report roll-out, especially if users encounter performance issues. One slow loading table can quickly turn a user’s experience from positive to negative. The best actions you can take to mitigate a situation like this are to set expectations and preemptively eliminate those performance pain points.

However, both of these actions require data on how your Power BI report will perform when 2, 10, 25, or 50 users are interacting with it. Report performance can often seem fine to the developer, but slows once enough end users gain access to it.

The exercise described in this article can help you understand and set benchmarks for user and server performance at any number of report consumers. In our use case, we tested user concurrency performance of a couple of Power BI report pages connected to a Tabular Model hosted on an Azure Analysis Service.

Every visual in a page within a Power BI report has a corresponding DAX query that is sent to the data model the report is connected to, so each chart, table, filter, etc. is a separate DAX query. When a single user opens a report page with six visuals and 14 filters, 20 DAX queries are being sent to the back-end engine to retrieve the relevant data for the visual.

Something to note in the testing in this exercise is that it’s only recording query response time, not the actual rendering time of visuals themselves. However, the duration of the DAX queries can provide us with an understanding of the response the average user can expect.

The tools that were used in this exercise:

DAX Studio: an open source software that can be used to design, run, and analyze DAX queries against services such as Analysis Services Tabular and Power BI Dataflows/Datasets.

Powershell: the tool that was used to trigger the DAX queries for the tests, simulating any number of concurrent users.

Azure Portal: the Azure monitoring site that was used during testing to gauge Memory and QPU Usage of the Tabular Models.

Methodology

We tested user concurrency with the following approach:

Connect DAX Studio to the Tabular Model using XMLA endpoints.

Run an “All Query” trace through DAX Studio on the instance/node.

Clear the cache of the model from DAX Studio.

In the Azure Portal, record the baseline Memory and QPU for the AAS instance.

Execute the PowerShell script for N users for a query set.

Save the results of the Query Trace (copy/paste them from DAX Studio).

For the query duration, record the peak Memory and QPU.

Wait until the instance returns to baseline before repeating the test with a larger number of simulated users.

Powershell

We developed two query sets: a complex one with six queries and a simple one with one query. Each query set corresponded to the large visuals on the two Power BI Report pages that we wanted to test. By testing two report pages of different complexity, we could see how performance would scale depending on that complexity. Each query set was tested against the same number of users, triggered via a Powershell script that followed the flow described below. The Powershell script used the “SqlServer” module in order to trigger the queries.

The Powershell script:

Connected to the AAS Tabular.

Read the queries in as variables from text files from the chosen query set.

Read possible filter values into an array.

Created a background powershell for each “user,” with each user running the query set for a different value of the selected filter.

The purpose of having each “user” run their query set with a different value for one filter is to prevent the Tabular model from caching the results of the query for the first user and re-using them instead of directly querying the model.

In a real-world scenario, this isn’t something that would be bad; caching can improve performance when a large number of users may be running the same query. However, for testing purposes, we need to see how many users can independently engage the models before performance becomes unacceptable. The filter that was chosen to be used for this purpose returned data volumes that were very close in size as to not skew results.

DAX Studio

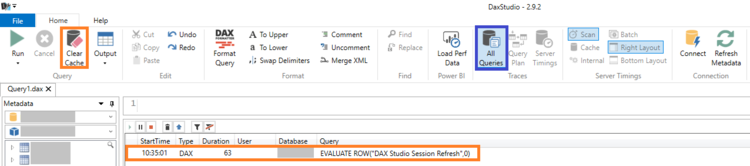

The two main features of DAX Studio that were a part of this exercise were “Clear Cache” and the “All Queries” trace.

Between each run of an increasing number of users, the cache was cleared to prevent the queries from previous tests from contaminating any future queries.

By using the “All Queries” trace, DAX Studio captures all queries that are executed on the Analysis instance that was connected to it, and provides statistics such as Start Time, Duration, the user that executed the query, the model, and the full DAX query.

Azure Portal

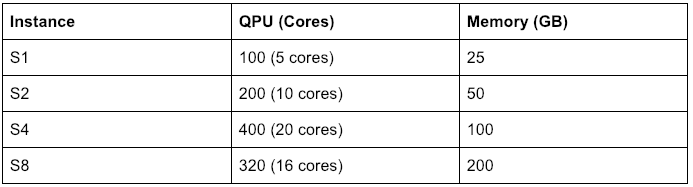

For the duration of the concurrency testing, the Metric monitoring portion of the Azure Portal was used to manually capture the baseline and peak values of two metrics: Memory and QPU. Each Azure instance tier has a corresponding amount of Memory (GB) and QPU.

The memory of the Analysis instance determines the limits that a query can hit before the instance crashes or produces an error. So by monitoring this, a test can get a sense of how many users can query the model before engaging that threshold.

Results

The data that was gathered was summarized into these metrics:

Total Query Duration: Total N users’ experience

Average Query Duration: Average user experience

Max Query Duration: Worst possible experience for user

CPU Variance: The compute cost of the N users

Memory Variance: The memory cost of the N users

The results that were collated led to a few conclusions on our part:

For Azure Analysis Services, bigger instance size doesn’t necessarily mean better performance. The data we saw indicated the average user response will reach a ten second threshold much more quickly on an S8 than on either an S2 or S4 instance.

The compute resources will typically hit the maximum between 10-15 users, though this isn't the best indicator of performance. Hitting the compute threshold doesn't necessarily lead to longer query execution times. In some cases it seems that the opposite occurs and an improvement in query times occurs when higher compute resources are measured.

Dataflows aren’t as performant with a large number of users as a Tabular Model. However, this could be due to several items, the most important being this feature is new and under development. There are some changes coming that Microsoft discussed at their Business Application Summit that could invalidate this point.

Overall, it’s important to note the small data sample of testing that is shown here. This process can be used as a guide to indicate what ranges the user experience should be. However, it is worth noting that the general trends of query response seen in the exercise were repeatable, while there may be some variation in the individual points between tests.

Conclusion

This type of testing can be used for a multitude of different purposes:

Comparing report performance with various numbers of users

Comparing user experience between different Azure Analysis Server sizes

Comparing concurrency performance between Analysis Services and Power BI Datasets built off of Power BI Dataflows (thanks to XMLA endpoints!)

Comparing the performance of a report connected to a model that you’ve made optimizations on and a report connected to the old model

This type of testing can be used in conjunction with other Performance features such as the Performance Analyzer Pane in Power BI Desktop, which can help isolate any performance issues that were noted over the course of these tests.