These independent LLM leaderboards provide an objective view of how today’s leading AI models compare across quality, speed, cost, and real-world capability. Together, they help cut through marketing claims and give a practical, data-driven lens for selecting the right model for specific business use cases.

Hopefully, seeing where your chosen model stacks up gives you some clarity.

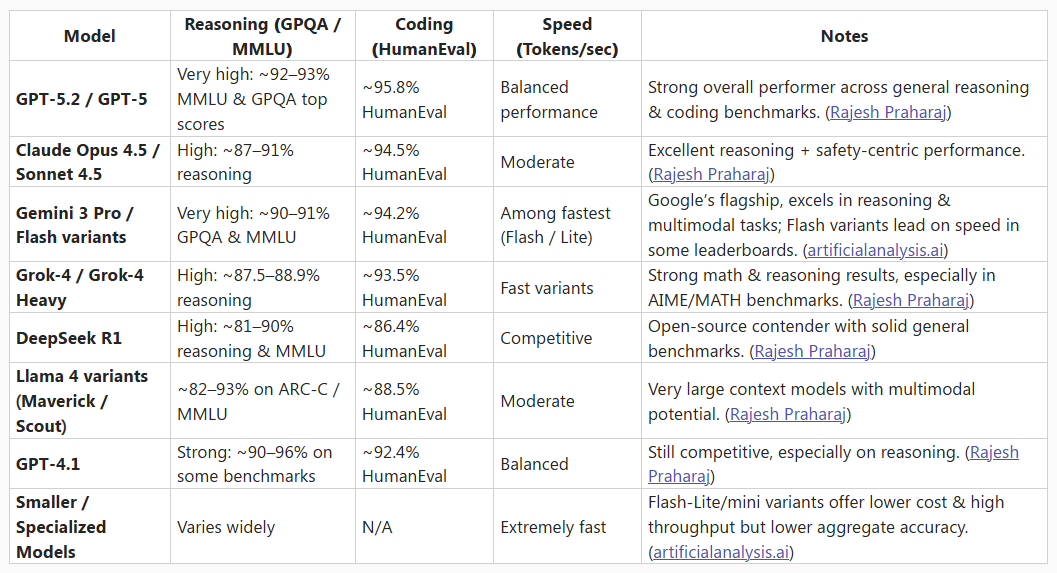

Several platforms now publish comparative tables that rank large language models on standardized benchmarks (reasoning, math, coding, multi-task understanding), context window size, pricing, speed, and real-world performance. LLM-Stats offers a broad leaderboard of dozens of models, listing benchmark scores (for example on MMLU and HumanEval), cost, and context window size; it is useful for side-by-side comparisons of OpenAI, Anthropic, and open-source models. ArtificialAnalysis.ai presents a detailed leaderboard of 100+ models with metrics such as intelligence scores, latency, and token costs, so speed and efficiency can be weighed alongside accuracy.
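Leaderboards commonly quote prices in USD per million tokens, often split into input and output rates. To translate those figures into a budget for a specific use case, a rough per-request estimate is usually enough. Here is a minimal Python sketch; the model names, prices, token counts, and request volume are hypothetical placeholders, not figures taken from any leaderboard.

```python
from dataclasses import dataclass


@dataclass
class ModelPricing:
    """Hypothetical price sheet for one model, in USD per 1M tokens."""
    name: str
    input_usd_per_m: float
    output_usd_per_m: float


def request_cost(p: ModelPricing, input_tokens: int, output_tokens: int) -> float:
    """Estimated USD cost of a single request."""
    return (input_tokens * p.input_usd_per_m
            + output_tokens * p.output_usd_per_m) / 1_000_000


def monthly_cost(p: ModelPricing, input_tokens: int, output_tokens: int,
                 requests_per_month: int) -> float:
    """Estimated USD cost for a steady monthly request volume."""
    return request_cost(p, input_tokens, output_tokens) * requests_per_month


if __name__ == "__main__":
    # Placeholder prices and workload; substitute real leaderboard figures.
    candidates = [
        ModelPricing("model-a", input_usd_per_m=3.00, output_usd_per_m=15.00),
        ModelPricing("model-b", input_usd_per_m=0.50, output_usd_per_m=1.50),
    ]
    for m in candidates:
        per_req = request_cost(m, input_tokens=2_000, output_tokens=500)
        per_month = monthly_cost(m, 2_000, 500, requests_per_month=100_000)
        print(f"{m.name}: ${per_req:.5f}/request, ${per_month:,.2f}/month")
```

With these placeholder numbers, model-a costs about $0.0135 per request ($1,350 per month at 100,000 requests), while model-b costs about $0.00175 ($175 per month). The cheaper model is only the better choice if its benchmark scores meet the quality bar for the use case.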

The Klu.ai LLM Leaderboard synthesizes multiple indicators (quality, cost, speed) into a unified index to highlight which models balance these factors well for different use cases. Other resources, like LLMYourWay.com, aggregate hundreds of thousands of individual benchmark results to compare model performance across many axes, and platforms such as Lambda’s LLM Benchmarks and Hugging Face’s leaderboards provide additional views into accuracy and inference characteristics.
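The exact method behind Klu.ai's unified index is not described here, so the following is only a hypothetical sketch of how such an index could be built: each metric is min-max normalized to the 0 to 1 range across the models being compared (with cost inverted so cheaper models score higher), then combined as a weighted sum. The metric names, weights, and sample values are assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class ModelMetrics:
    """Hypothetical per-model figures as a leaderboard might report them."""
    name: str
    quality: float           # benchmark-style score, higher is better
    usd_per_m_tokens: float  # blended price, lower is better
    tokens_per_sec: float    # output speed, higher is better


def min_max(values: list[float], higher_is_better: bool = True) -> list[float]:
    """Scale values to [0, 1]; invert when lower raw values are better."""
    lo, hi = min(values), max(values)
    if hi == lo:  # all models tie on this metric
        return [1.0] * len(values)
    scaled = [(v - lo) / (hi - lo) for v in values]
    return scaled if higher_is_better else [1.0 - s for s in scaled]


def composite_index(models: list[ModelMetrics],
                    w_quality: float = 0.6,
                    w_cost: float = 0.2,
                    w_speed: float = 0.2) -> list[tuple[str, float]]:
    """Rank models by a weighted sum of normalized metrics (hypothetical weights)."""
    q = min_max([m.quality for m in models])
    c = min_max([m.usd_per_m_tokens for m in models], higher_is_better=False)
    s = min_max([m.tokens_per_sec for m in models])
    scores = [(m.name, w_quality * qi + w_cost * ci + w_speed * si)
              for m, qi, ci, si in zip(models, q, c, s)]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    models = [
        ModelMetrics("model-a", quality=85.0, usd_per_m_tokens=10.0, tokens_per_sec=80.0),
        ModelMetrics("model-b", quality=70.0, usd_per_m_tokens=1.0, tokens_per_sec=150.0),
        ModelMetrics("model-c", quality=80.0, usd_per_m_tokens=4.0, tokens_per_sec=120.0),
    ]
    for name, score in composite_index(models):
        print(f"{name}: {score:.3f}")
```

With these sample values, model-c ranks first (about 0.648), ahead of model-a (0.600) despite model-a's higher quality score, because model-c balances quality, cost, and speed better. Note that min-max scores are relative to the models in the list, so adding or removing a model can reorder the rest; adjusting the weights is how a particular use case's priorities would be encoded.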

Leaderboard URLs

LLM-Stats (general leaderboard)

ArtificialAnalysis.ai LLM Leaderboard

Klu.ai LLM Leaderboard

LLMYourWay Benchmarks

Lambda LLM Benchmarks Leaderboard

Hugging Face Open LLM Leaderboards
https://huggingface.co/collections/open-llm-leaderboard/the-big-benchmarks-collection

__________________________________________________________________________________________

If you are looking for a solution that can incorporate any of these AI models, explore allmates.ai through a FREE trial.