Enterprise organisations continue to face significant challenges with managing, maintaining, and deriving value from their data. This is due to a variety of factors, including accessibility issues, expanding information volumes, disparate data sources, increasing complexity, and shortfalls in skills, technology knowledge, and data quality.

During the last decade in particular, the exponential growth of big data has made its management even more complicated and unwieldy. In response, businesses are prioritising the need to effectively unify and consistently govern data environments. For their strategies to succeed, they must ensure that their data enables them to remain both resilient and adaptable, to cope with rapid and extensive change (for example, from Covid, the global energy crisis, climate change, societal challenges, and inflation).

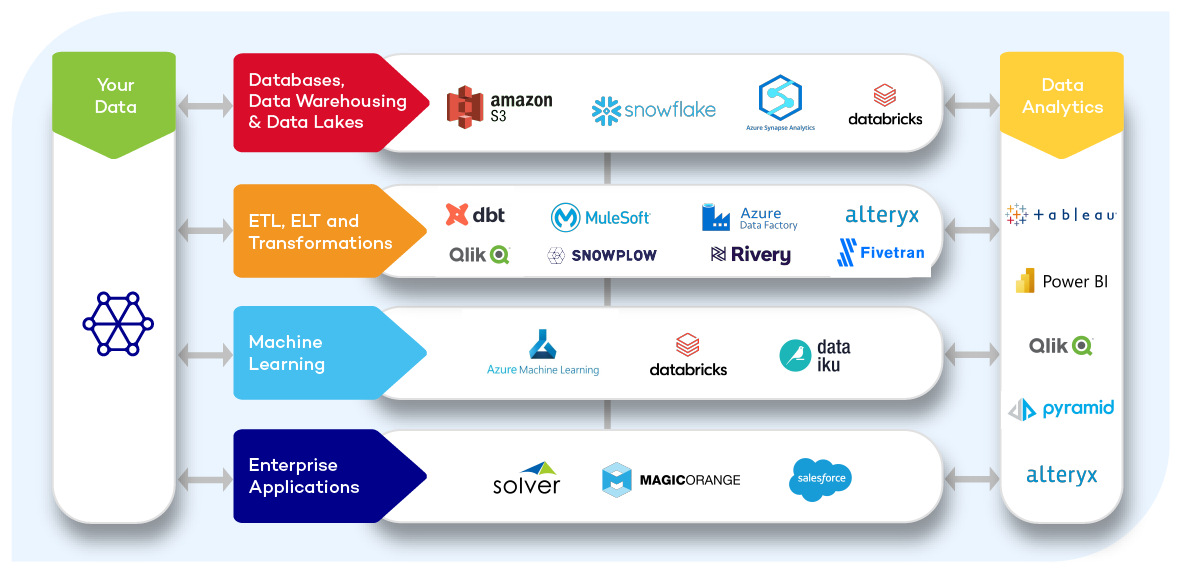

To address these requirements, the ideal solution is to construct a tailor-made data fabric: a dedicated data management design architecture that connects data sources with the entities consuming that data, such as applications, reporting tools, AI/ML algorithms, and so on.

Data fabric: what is it, and how does it benefit your business?

According to Gartner, a data fabric is defined as “an emerging data management design that promises to reduce the time to integrated data delivery through active metadata-assisted automation.” It is made up of an integrated layer (a fabric) of data and the connecting processes surrounding it.

In this way, it brings together all data from across the enterprise, from its multiple sources and applications, thereby preventing it from being limited or constrained by any single platform or tool. It is not meant to replace existing systems, but rather, to make them interoperable.

Data fabrics support a combination of different data integration styles and leverage metadata, knowledge graphs, semantics, and machine learning to augment data integration design and delivery. Although the concept is still gaining traction, it has captured the attention of organisations around the world for its clear potential to solve their complex data management dilemmas.

Significant benefits

Once deployed, a data fabric can immediately and significantly reduce manual data integration tasks, across the board, while becoming the common platform to handle data from multiple data and application sources and deliver it to its consumers to support the various needs of the organisation.

Ultimately, as well as unifying these disparate data systems, it also embeds reliable governance, strengthens security and privacy, and makes data more accessible to those that need it.

Monitor and manage your data and applications, regardless of where they reside.

Enjoy integrated data architecture that’s adaptive, flexible, and secure.

Drive more holistic, data-centric decision-making.

Gather data from legacy systems, data lakes, data warehouses, databases, and apps to create one holistic view of business performance.

Increase fluidity between data environments and make all data available across the enterprise.

The principles underpinning an effective data fabric

In its conception, a data fabric follows a set of clear and consistent guidelines to deliver these benefits:

It uses advanced connecting capabilities to interact with all data storage systems and applications/platforms, wherever they are located (on-premises, or in private or public clouds).

It enforces the collection and storage of all metadata, providing domain-specific context descriptions and technical storage details to make data reusable and promote interoperability.

It facilitates or automates the construction and execution of complex data transformation pipelines whenever possible, using new tools and advanced technologies like Natural Language Processing (NLP) and knowledge graphs.

It is composable by design: its components can be selected and assembled in different combinations to meet evolving needs.

It is future-proof and software-agnostic, to guarantee its long-term lifespan and ensure it adapts to technological and regulatory developments.
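To make the metadata principle above more concrete, here is a minimal sketch of what a metadata record combining domain context with technical storage details might look like. The class names, field names, and sample datasets are all hypothetical and are not taken from any particular data fabric product; a real implementation would persist this information rather than hold it in memory.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DatasetMetadata:
    """Hypothetical metadata record: domain context plus storage details."""
    name: str
    domain: str            # business domain, e.g. "sales"
    description: str       # domain-specific context for consumers
    location: str          # "on-premises", "private-cloud" or "public-cloud"
    storage_format: str    # technical detail, e.g. "parquet"
    tags: frozenset = frozenset()


class MetadataCatalog:
    """Hypothetical in-memory catalog used only for illustration."""

    def __init__(self):
        self._records = {}

    def register(self, record):
        # Store (or overwrite) the record under its dataset name.
        self._records[record.name] = record

    def find_by_domain(self, domain):
        # Return the names of all datasets in a business domain, sorted.
        return sorted(r.name for r in self._records.values() if r.domain == domain)

    def find_by_tag(self, tag):
        # Return the names of all datasets carrying a given tag, sorted.
        return sorted(r.name for r in self._records.values() if tag in r.tags)


catalog = MetadataCatalog()
catalog.register(DatasetMetadata(
    "orders", "sales", "Customer orders by day",
    "public-cloud", "parquet", frozenset({"personal-data"})))
catalog.register(DatasetMetadata(
    "invoices", "sales", "Issued invoices",
    "on-premises", "database-table"))
catalog.register(DatasetMetadata(
    "payroll", "hr", "Monthly payroll runs",
    "private-cloud", "csv", frozenset({"personal-data"})))

print(catalog.find_by_domain("sales"))
print(catalog.find_by_tag("personal-data"))
```

Keeping the domain description and the storage details in one record is what lets a consumer find a dataset by business meaning without needing to know in advance where or how it is stored.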

How does it work?

The underlying premise of a data fabric is the smooth flow of information from source to target. Along the way, a series of key business decisions is made, using pre-defined, automated processes. This automation provides a mechanism for metadata to deliver the insights needed to integrate this information intelligently and in a meaningful way.

This design starts with discovery: finding the interesting connections and patterns in the metadata that will enable this to happen. These show how people are working with other people and with data; which combinations of data are being used, which are being rejected, and where they are all being stored; how final data is communicated, and how frequently; and so on.
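As a simple illustration of this discovery step, the sketch below counts which combinations of datasets are used together most often. The usage log, user names, and dataset names are invented for the example; in practice this information would come from the metadata the fabric collects, and the analysis would be considerably richer.

```python
from collections import Counter
from itertools import combinations

# Hypothetical usage log: each entry records who did a piece of work
# and which datasets they combined for it.
usage_log = [
    ("analyst_a", {"orders", "customers"}),
    ("analyst_b", {"orders", "customers", "returns"}),
    ("engineer_c", {"orders", "inventory"}),
    ("analyst_a", {"customers", "orders"}),
]


def co_usage_counts(log):
    """Count how often each pair of datasets appears in the same piece of work."""
    counts = Counter()
    for _user, datasets in log:
        # Sort first so that each pair is always counted under the same key.
        for pair in combinations(sorted(datasets), 2):
            counts[pair] += 1
    return counts


pair_counts = co_usage_counts(usage_log)
most_common_pair, times_used = pair_counts.most_common(1)[0]
print(most_common_pair, times_used)
```

A pair that is frequently used together is a candidate for pre-integration, while a dataset that rarely appears in any combination may point to an accessibility or quality problem worth investigating.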

Alongside this, a review of the existing data management infrastructure and technology is carried out to assess its capabilities and maturity: in other words, what you can realistically expect it to achieve today, and what you need to do to ensure it continues to deliver what your data fabric needs in future.

These combined results determine which products and capabilities will be needed to make the data fabric work best for its particular organisational requirements.

What value does it deliver to you?

A data fabric’s biggest value proposition is that it can augment and automate many data management tasks. This value can only increase as data pipelines grow in size, volume, and complexity, and as data platforms continue to evolve.

In turn, this capability will help data management employees to work more productively, by managing more tasks in less time and freeing up capacity to focus on higher-value tasks [1].

The benefits for those closest to data management are clear. Business analysts can get closer to the data integration process, by being able to view specific datasets that are ready to be used for sensitive or personal information, to optimise performance. Data engineers can do more with fewer resources and less time, based on empirical evidence rather than ‘gut feel’, regarding insights about which engine to use, when to push down, when to virtualise, and so on. And senior data and analytics leaders can experience a faster turnaround time to realise their analytics projects by enabling efficient and governed self-service and reduced IT bottlenecks.

How Keyrus can help

Keyrus is your trusted partner for building sustainable, high-performing data architectures. Using our extensive and deep expertise in designing and creating data repositories, we can partner with you and your business to ensure that you receive a platform capable of meeting your data demands and delivering unique value to your stakeholders, employees, and customers.

We leverage insights and knowledge extracted from raw data to discover patterns and relationships, and then identify ways to improve your business in the areas you have chosen for review and analysis.

Keyrus is ready to help you design an actionable data strategy that will deliver your business objectives and drive commercial success. Contact us at sales@keyrus.co.za

[1] Source: Top Trends for D&A, 2022: https://www.gartner.com/document/4020801?ref=solrAll&refval=357220395